Stack Overflow Architecture (2016 Edition)

2022-07-22 tech troubleshooting architecture 484 mins 92 images 169423 words

This Stack Overflow series is excellent, so I’ve copied it here as a backup. Original link:

1. Stack Overflow: A Technical Deconstruction

One of the reasons I love working at Stack Overflow is that we’re not just allowed but encouraged to talk about almost anything out in the open. Except for things companies always keep private like financials and the nuclear launch codes, everything else is fair game. That’s an awesome thing that we haven’t taken advantage of on the technical side lately. I think it’s time for an experiment in extreme openness.

By sharing what we do (and I mean all of us), we better our world. Everyone that works at Stack shares at least one passion: improving life for all developers. Sharing how we do things is one of the best and biggest ways we can do that. It helps you. It helps me. It helps all of us.

When I tell you how we do <something>, a few things happen:

- You might learn something cool you didn’t know about.

- We might learn we’re doing it wrong.

- We’ll both find a better way, together…and we share that too.

- It helps eliminate the perception that “the big boys” always do it right. No, we screw up too.

There’s nothing to lose here and there’s no reason to keep things to yourself unless you’re afraid of being wrong. Good news: that’s not a problem. We get it wrong all the time anyway, so I’m not really worried about that one. Failure is always an option. The best any of us can do is live, learn, move on, and do it better next time.

Here’s where I need your help

I need you to tell me: what do you want to hear about? My intention is to get to a great many things, but it will take some time. What are people most interested in? How do I decide which topic to blog about next? The answer: I don’t know and I can’t decide. That’s where you come in. Please, tell me.

I put together this Trello board: Blog post queue for Stack Overflow topics

I’m also embedding it here for ease; hopefully this adds a lot of concreteness to the adventure:

It’s public. You can comment and vote on topics as well as suggest new topics either on the board itself or shoot me a tweet: @Nick_Craver. Please, help me out by simply voting for what you want to know so I can prioritize the queue. If you see a topic and have specific questions, please comment on the card so I make sure to answer it in the post.

The first post won’t be vote-driven. I think it has to be the architecture overview so all future references make sense. After that, I’ll go down the board and blog the highest-voted topic each time.

I’ve missed blogging due to spending my nights entirely in open source lately. I don’t believe that’s necessarily the best or only way for me to help developers. Having votes for topics gives me real motivation to dedicate the time to writing them up, pulling the stats, and making the pretty pictures. For that, I thank everyone participating.

If you’re curious about my writing style and what to expect, check out some of my previous posts:

- How we upgrade a live data center

- What it takes to run Stack Overflow

- Stackoverflow.com: the road to SSL

Am I crazy? Yep, probably - that’s already a lot of topics. But I think it’ll be fun and engaging. Let’s go on this adventure together.

2. Stack Overflow: The Architecture - 2016 Edition

This is #1 in a very long series of posts on Stack Overflow’s architecture. Welcome. Previous post (#0): Stack Overflow: A Technical Deconstruction Next post (#2): Stack Overflow: The Hardware - 2016 Edition

To get an idea of what all of this stuff “does,” let me start off with an update on the average day at Stack Overflow. So you can compare to the previous numbers from November 2013, here’s a day of statistics from February 9th, 2016 with differences since November 12th, 2013:

- 209,420,973 (+61,336,090) HTTP requests to our load balancer

- 66,294,789 (+30,199,477) of those were page loads

- 1,240,266,346,053 (+406,273,363,426) bytes (1.24 TB) of HTTP traffic sent

- 569,449,470,023 (+282,874,825,991) bytes (569 GB) total received

- 3,084,303,599,266 (+1,958,311,041,954) bytes (3.08 TB) total sent

- 504,816,843 (+170,244,740) SQL Queries (from HTTP requests alone)

- 5,831,683,114 (+5,418,818,063) Redis hits

- 17,158,874 (not tracked in 2013) Elastic searches

- 3,661,134 (+57,716) Tag Engine requests

- 607,073,066 (+48,848,481) ms (168 hours) spent running SQL queries

- 10,396,073 (-88,950,843) ms (2.8 hours) spent on Redis hits

- 147,018,571 (+14,634,512) ms (40.8 hours) spent on Tag Engine requests

- 1,609,944,301 (-1,118,232,744) ms (447 hours) spent processing in ASP.Net

- 22.71 (-5.29) ms average (19.12 ms in ASP.Net) for 49,180,275 question page renders

- 11.80 (-53.2) ms average (8.81 ms in ASP.Net) for 6,370,076 home page renders
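The hour figures in the list above are the millisecond totals divided by 3,600,000, truncated rather than rounded. This short Python sketch redoes that conversion as a check. It also backs out the November 2013 totals by subtracting each reported change. The dictionary simply copies the numbers from the list.

```python
MS_PER_HOUR = 1000 * 60 * 60

# (February 9th, 2016 total in ms, change since November 12th, 2013 in ms)
STATS = {
    "SQL queries": (607_073_066, 48_848_481),
    "Redis hits": (10_396_073, -88_950_843),
    "Tag Engine requests": (147_018_571, 14_634_512),
    "ASP.Net processing": (1_609_944_301, -1_118_232_744),
}

def to_hours(ms: int) -> float:
    return ms / MS_PER_HOUR

def summarize(stats):
    """Map each metric to (hours in 2016, hours in 2013)."""
    return {
        name: (to_hours(total), to_hours(total - delta))
        for name, (total, delta) in stats.items()
    }

for name, (h2016, h2013) in summarize(STATS).items():
    print(f"{name}: {h2016:.1f} h in 2016 vs {h2013:.1f} h in 2013")
```

The ASP.Net line works out to about 757.8 hours for 2013, which is where the 757-hour figure for 2013 comes from.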

You may be wondering about the drastic ASP.Net reduction in processing time compared to 2013 (which was 757 hours) despite 61 million more requests a day. That’s due to both a hardware upgrade in early 2015 as well as a lot of performance tuning inside the applications themselves. Please don’t forget: performance is still a feature. If you’re curious about more hardware specifics than I’m about to provide—fear not. The next post will be an appendix with detailed hardware specs for all of the servers that run the sites (I’ll update this with a link when it’s live).

So what’s changed in the last 2 years? Besides replacing some servers and network gear, not much. Here’s a top-level list of hardware that runs the sites today (noting what’s different since 2013):

- 4 Microsoft SQL Servers (new hardware for 2 of them)

- 11 IIS Web Servers (new hardware)

- 2 Redis Servers (new hardware)

- 3 Tag Engine servers (new hardware for 2 of the 3)

- 3 Elasticsearch servers (same)

- 4 HAProxy Load Balancers (added 2 to support CloudFlare)

- 2 Networks (each a Nexus 5596 Core + 2232TM Fabric Extenders, upgraded to 10Gbps everywhere)

- 2 Fortinet 800C Firewalls (replaced Cisco 5525-X ASAs)

- 2 Cisco ASR-1001 Routers (replaced Cisco 3945 Routers)

- 2 Cisco ASR-1001-x Routers (new!)

What do we need to run Stack Overflow? That hasn’t changed much since 2013, but due to the optimizations and new hardware mentioned above, we’re down to needing only 1 web server. We have unintentionally tested this, successfully, a few times. To be clear: I’m saying it works. I’m not saying it’s a good idea. It’s fun though, every time.

Now that we have some baseline numbers for an idea of scale, let’s see how we make those fancy web pages. Since few systems exist in complete isolation (and ours is no exception), architecture decisions often make far less sense without a bigger picture of how those pieces fit into the whole. That’s the goal here, to cover the big picture. Many subsequent posts will do deep dives into specific areas. This will be a logistical overview with hardware highlights only; the next post will have the hardware details.

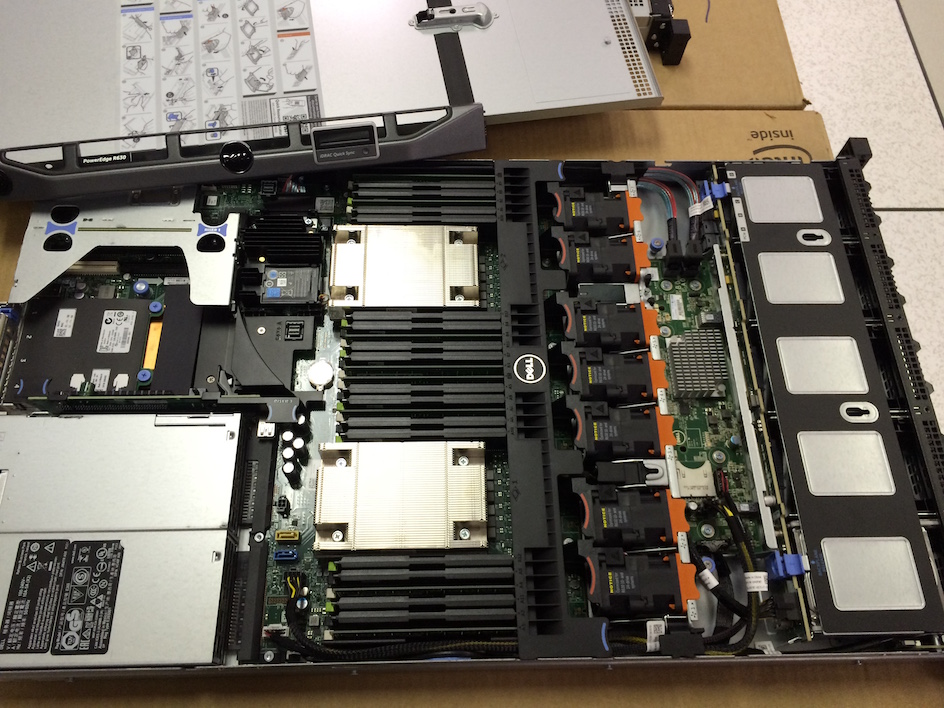

For those of you here to see what the hardware looks like these days, here are a few pictures I took of rack A (it has a matching sister rack B) during our February 2015 upgrade:

…and if you’re into that kind of thing, here’s the entire 256 image album from that week (you’re damn right that number’s intentional). Now, let’s dig into layout. Here’s a logical overview of the major systems in play:

Ground Rules

Here are some rules that apply globally so I don’t have to repeat them with every setup:

- Everything is redundant.

- All servers and network gear have at least 2x 10Gbps connectivity.

- All servers have 2 power feeds via 2 power supplies from 2 UPS units backed by 2 generators and 2 utility feeds.

- All servers have a redundant partner between rack A and B.

- All servers and services are doubly redundant via another data center (Colorado), though I’m mostly talking about New York here.

- Everything is redundant.

The Internets

First, you have to find us—that’s DNS. Finding us needs to be fast, so we farm this out to CloudFlare (currently) because they have DNS servers nearer to almost everyone around the world. We update our DNS records via an API and they do the “hosting” of DNS. But since we’re jerks with deeply-rooted trust issues, we still have our own DNS servers as well. Should the apocalypse happen (probably caused by the GPL, Punyon, or caching) and people still want to program to take their mind off of it, we’ll flip them on.

After you find our secret hideout, HTTP traffic comes from one of our four ISPs (Level 3, Zayo, Cogent, and Lightower in New York) and flows through one of our four edge routers. We peer with our ISPs using BGP (fairly standard) in order to control the flow of traffic and provide several avenues for traffic to reach us most efficiently. These ASR-1001 and ASR-1001-X routers are in 2 pairs, each servicing 2 ISPs in active/active fashion—so we’re redundant here. Though they’re all on the same physical 10Gbps network, external traffic is in separate isolated external VLANs which the load balancers are connected to as well. After flowing through the routers, you’re headed for a load balancer.

I suppose this may be a good time to mention we have a 10Gbps MPLS between our 2 data centers, but it is not directly involved in serving the sites. We use this for data replication and quick recovery in the cases where we need a burst. “But Nick, that’s not redundant!” Well, you’re technically correct (the best kind of correct), that’s a single point of failure on its face. But wait! We maintain 2 more failover OSPF routes (the MPLS is #1, these are #2 and #3 by cost) via our ISPs. Each of the sets mentioned earlier connects to the corresponding set in Colorado, and they load balance traffic between them in the failover situation. We could make both sets connect to both sets and have 4 paths but, well, whatever. Moving on.
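OSPF prefers the lowest-cost route that is still available. That is what makes the MPLS link primary and the two ISP paths backups. Below is a toy Python model of that preference order. The cost values 10/20/30 are made up, because the post only gives the ranking. Equal-cost multipath is not modeled.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Route:
    name: str
    cost: int          # hypothetical OSPF cost; lower wins
    up: bool = True

def best_route(routes: List[Route]) -> Optional[Route]:
    """Pick the cheapest route that is still up, or None if every path is down."""
    return min((r for r in routes if r.up), key=lambda r: r.cost, default=None)

routes = [
    Route("MPLS to Colorado", 10),
    Route("ISP pair 1 to Colorado", 20),
    Route("ISP pair 2 to Colorado", 30),
]
print(best_route(routes).name)   # MPLS to Colorado
routes[0].up = False             # the single MPLS circuit fails
print(best_route(routes).name)   # ISP pair 1 to Colorado
```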

Load Balancers (HAProxy)

The load balancers are running HAProxy 1.5.15 on CentOS 7, our preferred flavor of Linux. TLS (SSL) traffic is also terminated in HAProxy. We’ll be looking hard at HAProxy 1.7 soon for HTTP/2 support.

Unlike all other servers with a dual 10Gbps LACP network link, each load balancer has 2 pairs of 10Gbps: one for the external network and one for the DMZ. These boxes run 64GB or more of memory to more efficiently handle SSL negotiation. When we can cache more TLS sessions in memory for reuse, there’s less to recompute on subsequent connections to the same client. This means we can resume sessions both faster and cheaper. Given that RAM is pretty cheap dollar-wise, it’s an easy choice.
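The memory argument is easiest to see with a toy model. The Python sketch below treats the TLS session cache as a fixed-size LRU map of session IDs. This is a simplification, not how HAProxy or OpenSSL actually store sessions. The round-robin client pattern is also an assumption, chosen to make the effect obvious.

```python
from collections import OrderedDict

class SessionCache:
    """Toy LRU cache of TLS session IDs (a model, not HAProxy's implementation)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._sessions = OrderedDict()

    def connect(self, session_id: str) -> bool:
        """Return True if the session can be resumed, False if a full handshake is needed."""
        if session_id in self._sessions:
            self._sessions.move_to_end(session_id)   # mark as most recently used
            return True
        self._sessions[session_id] = True
        if len(self._sessions) > self.capacity:
            self._sessions.popitem(last=False)       # evict the least recently used session
        return False

def resumption_rate(capacity: int, clients: int, rounds: int) -> float:
    """Fraction of connections resumed when `clients` reconnect in turn, `rounds` times."""
    cache = SessionCache(capacity)
    resumed = total = 0
    for _ in range(rounds):
        for c in range(clients):
            resumed += cache.connect(f"client-{c}")
            total += 1
    return resumed / total

print(resumption_rate(capacity=50, clients=100, rounds=4))    # 0.0
print(resumption_rate(capacity=100, clients=100, rounds=4))   # 0.75
```

In this example, 100 clients cycle through a cache that holds only 50 sessions. LRU eviction means no session survives long enough to be resumed. Doubling the capacity lets 3 of every 4 connections skip the full handshake.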

The load balancers themselves are a pretty simple setup. We listen to different sites on various IPs (mostly for certificate concerns and DNS management) and route to various backends based mostly on the host header. The only notable things we do here are rate limiting and some header captures (sent from our web tier) into the HAProxy syslog message so we can record performance metrics for every single request. We’ll cover that later too.
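Here is a rough Python illustration of the two ideas in that paragraph: picking a backend from the Host header, and rate limiting. The backend names are hypothetical. The post does not say how the limits are implemented. A token bucket is used here only as one common way to express the idea. A real setup would keep one bucket per client key, such as the source IP.

```python
import time

# Hypothetical host -> backend names; the real HAProxy backends are not listed in the post.
BACKENDS = {
    "stackoverflow.com": "be_stackoverflow",
    "meta.stackoverflow.com": "be_meta",
}
DEFAULT_BACKEND = "be_network"

def pick_backend(host_header: str) -> str:
    """Route on the Host header, ignoring any :port suffix and letter case."""
    host = host_header.split(":", 1)[0].strip().lower()
    return BACKENDS.get(host, DEFAULT_BACKEND)

class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: int, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.clock = clock
        self.tokens = float(burst)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last check, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

# Usage with a fake clock so the behavior is deterministic.
fake_now = [0.0]
bucket = TokenBucket(rate=1, burst=2, clock=lambda: fake_now[0])
print(pick_backend("Meta.StackOverflow.com:443"))   # be_meta
print([bucket.allow() for _ in range(3)])           # [True, True, False]
fake_now[0] = 1.0
print(bucket.allow())                               # True
```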

Web Tier (IIS 8.5, ASP.Net MVC 5.2.3, and .Net 4.6.1)

The load balancers feed traffic to 9 servers we refer to as “primary” (01-09) and 2 “dev/meta” (10-11, our staging environment) web servers. The primary servers run things like Stack Overflow, Careers, and all Stack Exchange sites except meta.stackoverflow.com and meta.stackexchange.com, which run on the last 2 servers. The primary Q&A Application itself is multi-tenant. This means that a single application serves the requests for all Q&A sites. Put another way: we can run the entire Q&A network off of a single application pool on a single server. Other applications like Careers, API v2, Mobile API, etc. are separate. Here’s what the primary and dev tiers look like in IIS:

Here’s what Stack Overflow’s distribution across the web tier looks like in Opserver (our internal monitoring dashboard):

…and here’s what those web servers look like from a utilization perspective:

I’ll go into why we’re so overprovisioned in future posts, but the highlight items are: rolling builds, headroom, and redundancy.

Service Tier (IIS, ASP.Net MVC 5.2.3, .Net 4.6.1, and HTTP.SYS)

Behind those web servers is the very similar “service tier.” It’s also running IIS 8.5 on Windows 2012R2. This tier runs internal services to support the production web tier and other internal systems. The two big players here are “Stack Server” which runs the tag engine and is based on http.sys (not behind IIS) and the Providence API (IIS-based). Fun fact: I have to set affinity on each of these 2 processes to land on separate sockets because Stack Server just steamrolls the L2 and L3 cache when refreshing question lists on a 2-minute interval.

These service boxes do heavy lifting with the tag engine and backend APIs where we need redundancy, but not 9x redundancy. For example, loading all of the posts and their tags that change every n minutes from the database (currently 2) isn’t that cheap. We don’t want to do that load 9 times on the web tier; 3 times is enough and gives us enough safety. We also configure these boxes differently on the hardware side to be better optimized for the different computational load characteristics of the tag engine and elastic indexing jobs (which also run here). The “tag engine” is a relatively complicated topic in itself and will be a dedicated post. The basics are: when you visit /questions/tagged/java, you’re hitting the tag engine to see which questions match. It does all of our tag matching outside of /search, so the new navigation, etc. are all using this service for data.
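The tag engine gets its own post later, so its real design is not described here. As a rough sketch of the general shape of "which questions match these tags", here is a toy inverted index in Python. It maps each tag to a set of question IDs, is rebuilt on a refresh, and answers queries by set intersection. The class, the sample data, and the newest-ID-first ordering are all assumptions for illustration.

```python
from collections import defaultdict

class TagIndex:
    """Toy inverted index from tag to question IDs (hypothetical, not Stack Server's design)."""

    def __init__(self):
        self._by_tag = defaultdict(set)

    def refresh(self, questions):
        """Rebuild the index from (question_id, tags) pairs, e.g. on a periodic reload."""
        by_tag = defaultdict(set)
        for qid, tags in questions:
            for tag in tags:
                by_tag[tag].add(qid)
        self._by_tag = by_tag   # swap the whole index in with one assignment

    def tagged(self, *tags):
        """IDs of questions carrying all of the given tags, highest (newest) ID first."""
        if not tags:
            return []
        sets = [self._by_tag.get(t, set()) for t in tags]
        return sorted(set.intersection(*sets), reverse=True)

idx = TagIndex()
idx.refresh([(1, ["java"]), (2, ["java", "spring"]), (3, ["python"])])
print(idx.tagged("java"))             # [2, 1]
print(idx.tagged("java", "spring"))   # [2]
```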

Cache & Pub/Sub (Redis)

We use Redis for a few things here and it’s rock solid. Despite doing about 160 billion ops a month, every instance is below 2% CPU. Usually much lower:

We have an L1/L2 cache system with Redis. “L1” is HTTP Cache on the web servers or whatever application is in play. “L2” is falling back to Redis and fetching the value out. Our values are stored in the Protobuf format, via protobuf-dot-net by Marc Gravell. For a client, we’re using StackExchange.Redis—written in-house and open source. When one web server gets a cache miss in both L1 and L2, it fetches the value from source (a database query, API call, etc.) and puts the result in both local cache and Redis. The next server wanting the value may miss L1, but would find the value in L2/Redis, saving a database query or API call.
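The lookup order described above can be sketched in a few lines of Python. `FakeRedis` is an in-process stand-in for the shared Redis server. The sketch makes three simplifying assumptions: values are stored as-is rather than serialized with protobuf-net, nothing expires, and an L2 hit also fills the local L1 (the post does not spell that last step out).

```python
class FakeRedis:
    """Stand-in for the shared Redis server (L2); real code would use a Redis client."""

    def __init__(self):
        self.data = {}

    def get(self, key):
        return self.data.get(key)  # None on a miss, like Redis GET

    def set(self, key, value):
        self.data[key] = value


class TwoLevelCache:
    """One instance per web server: a private L1 dict in front of the shared L2."""

    def __init__(self, l2):
        self.l1 = {}
        self.l2 = l2

    def get_or_fetch(self, key, fetch):
        if key in self.l1:                 # L1 hit: no network round trip at all
            return self.l1[key]
        value = self.l2.get(key)
        if value is None:                  # missed both levels: go to the source
            value = fetch()                # e.g. a database query or API call
            self.l2.set(key, value)        # share it with the other web servers
        self.l1[key] = value               # keep a local copy for next time
        return value


shared = FakeRedis()
web01, web02 = TwoLevelCache(shared), TwoLevelCache(shared)
calls = []

def load_question():
    calls.append("db")
    return "question #1 from SQL"

web01.get_or_fetch("q:1", load_question)   # misses L1 and L2, queries the "database"
web02.get_or_fetch("q:1", load_question)   # misses its own L1, hits L2
print(calls)                                # ['db']
```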

We also run many Q&A sites, so each site has its own L1/L2 caching: by key prefix in L1 and by database ID in L2/Redis. We’ll go deeper on this in a future post.

Alongside the 2 main Redis servers (master/slave) that run all the site instances, we also have a machine learning instance slaved across 2 more dedicated servers (due to memory). This is used for recommending questions on the home page, better matching to jobs, etc. It’s a platform called Providence, covered by Kevin Montrose here.

The main Redis servers have 256GB of RAM (about 90GB in use) and the Providence servers have 384GB of RAM (about 125GB in use).

Redis isn’t just for cache though; it also has a publish & subscribe mechanism where one server can publish a message and all other subscribers receive it, including downstream clients on Redis slaves. We use this mechanism to clear L1 caches on other servers when one web server does a removal for consistency, but there’s another great use: websockets.
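Here is a minimal model of that invalidation flow. An in-process `FakePubSub` stands in for a Redis channel, and the channel name is hypothetical. When one server removes a key, it publishes the key, and every subscribed server drops its local L1 copy. The publisher also receives its own message, which is harmless here because the key is already gone.

```python
from collections import defaultdict

class FakePubSub:
    """In-process stand-in for a Redis pub/sub channel."""

    def __init__(self):
        self._subs = defaultdict(list)

    def subscribe(self, channel, handler):
        self._subs[channel].append(handler)

    def publish(self, channel, message):
        for handler in self._subs[channel]:
            handler(message)
        return len(self._subs[channel])  # Redis PUBLISH also returns the receiver count


class WebServer:
    CHANNEL = "l1-invalidate"  # hypothetical channel name

    def __init__(self, name, bus):
        self.name = name
        self.l1 = {}
        self.bus = bus
        bus.subscribe(self.CHANNEL, self._on_invalidate)

    def _on_invalidate(self, key):
        self.l1.pop(key, None)  # drop the local copy if we have one

    def remove(self, key):
        self.l1.pop(key, None)
        self.bus.publish(self.CHANNEL, key)  # tell every other server to drop it too


bus = FakePubSub()
web01, web02 = WebServer("ny-web01", bus), WebServer("ny-web02", bus)
web01.l1["user:42"] = "cached profile"
web02.l1["user:42"] = "cached profile"
web01.remove("user:42")
print("user:42" in web02.l1)   # False
```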

Websockets (https://github.com/StackExchange/NetGain)

We use websockets to push real-time updates to users such as notifications in the top bar, vote counts, new nav counts, new answers and comments, and a few other bits.

The socket servers themselves are using raw sockets running on the web tier. It’s a very thin application on top of our open source library: StackExchange.NetGain. During peak, we have about 500,000 concurrent websocket connections open. That’s a lot of browsers. Fun fact: some of those browsers have been open for over 18 months. We’re not sure why. Someone should go check if those developers are still alive. Here’s what this week’s concurrent websocket pattern looks like:

Why websockets? They’re tremendously more efficient than polling at our scale. We can simply push more data with fewer resources this way, while being more instant to the user. They’re not without issues though—ephemeral port and file handle exhaustion on the load balancer are fun issues we’ll cover later.
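A back-of-the-envelope comparison shows why polling would hurt. The Python below estimates the HTTP requests polling would generate. It assumes, hypothetically, that each of the ~500,000 peak clients polls once every 30 seconds. It also pessimistically holds that peak all day.

```python
CONCURRENT_CLIENTS = 500_000       # peak concurrent websocket connections, from the text
POLL_INTERVAL_S = 30               # hypothetical polling interval
SECONDS_PER_DAY = 86_400
DAILY_HTTP_REQUESTS = 209_420_973  # load balancer requests on February 9th, 2016

def polling_load(clients: int, interval_s: float):
    """Requests per second and per day if every client polled on a fixed interval."""
    per_second = clients / interval_s
    return per_second, per_second * SECONDS_PER_DAY

per_second, per_day = polling_load(CONCURRENT_CLIENTS, POLL_INTERVAL_S)
print(f"{per_second:,.0f} req/s, {per_day:,.0f} req/day, "
      f"{per_day / DAILY_HTTP_REQUESTS:.1f}x the actual daily total")
```

That works out to roughly 16,667 requests per second, or about 1.44 billion a day. This is nearly 7 times the 209 million requests the load balancers actually handled that day, and most of those polls would come back empty.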

Search (Elasticsearch)

Spoiler: there’s not a lot to get excited about here. The web tier is doing pretty vanilla searches against Elasticsearch 1.4, using the very slim high-performance StackExchange.Elastic client. Unlike most things, we have no plans to open source this simply because it only exposes a very slim subset of the API we use. I strongly believe releasing it would do more harm than good with developer confusion. We’re using elastic for /search, calculating related questions, and suggestions when asking a question.

Each Elastic cluster (there’s one in each data center) has 3 nodes, and each site has its own index. Careers has an additional few indexes. What makes our setup a little non-standard in the elastic world: our 3 server clusters are a bit beefier than average with all SSD storage, 192GB of RAM, and dual 10Gbps network each.

The same application domains (yeah, we’re screwed with .Net Core here…) in Stack Server that host the tag engine also continually index items in Elasticsearch. We do some simple tricks here such as ROWVERSION in SQL Server (the data source) compared against a “last position” document in Elastic. Since it behaves like a sequence, we can simply grab and index any items that have changed since the last pass.
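The "last position" trick is a high-water-mark loop: fetch rows whose ROWVERSION is greater than the last one indexed, index them in order, and advance the marker. The Python sketch below models it with plain lists and dicts instead of SQL Server and Elasticsearch. The class and field names are hypothetical. A production version would also have to handle transactions that commit out of rowversion order, which this toy ignores.

```python
from dataclasses import dataclass

@dataclass
class Post:
    id: int
    body: str
    rowversion: int  # stands in for SQL Server ROWVERSION, a counter bumped on insert/update

class Indexer:
    def __init__(self):
        self.index = {}           # stands in for the Elasticsearch index
        self.last_position = 0    # stands in for the "last position" document

    def run_pass(self, table):
        """Index every row changed since the previous pass; return how many were indexed."""
        # Roughly: SELECT ... WHERE RowVersion > @last ORDER BY RowVersion
        changed = sorted(
            (p for p in table if p.rowversion > self.last_position),
            key=lambda p: p.rowversion,
        )
        for post in changed:
            self.index[post.id] = post.body
            self.last_position = post.rowversion  # advance only after the row is indexed
        return len(changed)

table = [Post(1, "first", 1), Post(2, "second", 2)]
indexer = Indexer()
print(indexer.run_pass(table))            # 2
table[0] = Post(1, "first (edited)", 3)   # an update gets a new, higher rowversion
print(indexer.run_pass(table))            # 1
print(indexer.run_pass(table))            # 0
```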

The main reason we’re on Elasticsearch instead of something like SQL full-text search is scalability and better allocation of money. SQL CPUs are comparatively very expensive; Elastic is cheap and has far more features these days. Why not Solr? We want to search across the entire network (many indexes at once), and this wasn’t supported at decision time. The reason we’re not on 2.x yet is that a major change to “types” means we need to reindex everything to upgrade. I just don’t have enough time to make the needed changes and migration plan yet.

Databases (SQL Server)

We’re using SQL Server as our single source of truth. All data in Elastic and Redis comes from SQL Server. We run 2 SQL Server clusters with AlwaysOn Availability Groups. Each of these clusters has 1 master (taking almost all of the load) and 1 replica in New York. Additionally, they have 1 replica in Colorado (our DR data center). All replicas are asynchronous.

The first cluster is a set of Dell R720xd servers, each with 384GB of RAM, 4TB of PCIe SSD space, and 2x 12 cores. It hosts the Stack Overflow, Sites (bad name, I’ll explain later), PRIZM, and Mobile databases.

The second cluster is a set of Dell R730xd servers, each with 768GB of RAM, 6TB of PCIe SSD space, and 2x 8 cores. This cluster runs everything else. That list includes Talent, Open ID, Chat, our Exception log, and every other Q&A site (e.g. Super User, Server Fault, etc.).

CPU utilization on the database tier is something we like to keep very low, but it’s actually a little high at the moment due to some plan cache issues we’re addressing. As of right now, NY-SQL02 and 04 are masters; 01 and 03 are replicas we just restarted today during some SSD upgrades. Here’s what the past 24 hours looks like:

Our usage of SQL is pretty simple. Simple is fast. Though some queries can be crazy, our interaction with SQL itself is fairly vanilla. We have some legacy Linq2Sql, but all new development is using Dapper, our open source Micro-ORM using POCOs. Let me put this another way: Stack Overflow has only 1 stored procedure in the database and I intend to move that last vestige into code.
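The micro-ORM idea is to write plain SQL and get back plain typed objects with no query builder in between. Here is a rough Python analogy using `sqlite3` and a dataclass. It is not Dapper's API. The `query` helper, table, and sample rows are hypothetical.

```python
import sqlite3
from dataclasses import dataclass, fields
from typing import List, Type, TypeVar

T = TypeVar("T")

@dataclass
class Question:  # a plain object, playing the role of a POCO
    id: int
    title: str
    score: int

def query(conn: sqlite3.Connection, cls: Type[T], sql: str, params: tuple = ()) -> List[T]:
    """Run hand-written SQL and map each row onto cls by column name."""
    cur = conn.execute(sql, params)
    columns = [d[0] for d in cur.description]
    wanted = {f.name for f in fields(cls)}
    return [cls(**{c: v for c, v in zip(columns, row) if c in wanted}) for row in cur.fetchall()]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT, score INTEGER)")
conn.executemany(
    "INSERT INTO posts VALUES (?, ?, ?)",
    [(1, "How do I exit Vim?", 3), (2, "What is a NullPointerException?", 12)],
)
popular = query(conn, Question, "SELECT id, title, score FROM posts WHERE score > ? ORDER BY id", (5,))
print(popular)
```

The SQL stays visible and parameterized, and the only "magic" is matching column names to fields.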

Libraries

Okay, let’s change gears to something that can more directly help you. I’ve mentioned a few of these up above, but I’ll provide a list here of many open-source .Net libraries we maintain for the world to use. We open sourced them because they have no core business value but can help the world of developers. I hope you find these useful today:

- Dapper (.Net Core) - High-performance Micro-ORM for ADO.Net

- StackExchange.Redis - High-performance Redis client

- MiniProfiler - Lightweight profiler we run on every page (also supports Ruby, Go, and Node)

- Exceptional - Error logger for SQL, JSON, MySQL, etc.

- Jil - High-performance JSON (de)serializer

- Sigil - A .Net CIL generation helper (for when C# isn’t fast enough)

- NetGain - High-performance websocket server

- Opserver - Monitoring dashboard polling most systems directly and feeding from Orion, Bosun, or WMI as well.

- Bosun - Backend monitoring system, written in Go

Next up is a detailed current hardware list of what runs our code. After that, we go down the list. Stay tuned.

3. Stack Overflow: The Hardware - 2016 Edition

This is #2 in a very long series of posts on Stack Overflow’s architecture. Previous post (#1): Stack Overflow: The Architecture - 2016 Edition Next post (#3): Stack Overflow: How We Do Deployment - 2016 Edition

Who loves hardware? Well, I do and this is my blog so I win. If you don’t love hardware then I’d go ahead and close the browser.

Still here? Awesome. Or your browser is crazy slow, in which case you should think about some new hardware.

I’ve repeated many, many times: performance is a feature. Since your code is only as fast as the hardware it runs on, the hardware definitely matters. Just like any other platform, Stack Overflow’s architecture comes in layers. Hardware is the foundation layer for us, and having it in-house affords us many luxuries not available in other scenarios…like running on someone else’s servers. It also comes with direct and indirect costs. But that’s not the point of this post, that comparison will come later. For now, I want to provide a detailed inventory of our infrastructure for reference and comparison purposes. And pictures of servers. Sometimes naked servers. This web page could have loaded much faster, but I couldn’t help myself.

In many posts through this series I will give a lot of numbers and specs. When I say “our SQL server utilization is almost always at 5–10% CPU,” well, that’s great. But, 5–10% of what? That’s when we need a point of reference. This hardware list is meant to both answer those questions and serve as a source for comparison when looking at other platforms and what utilization may look like there, how much capacity to compare to, etc.
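As a concrete example of "5–10% of what?", this Python snippet converts a utilization percentage into busy core-equivalents for the two SQL Server clusters specced below. It assumes the percentage is measured against physical cores and ignores hyper-threading and turbo, so treat it as a rough reference point only.

```python
def busy_cores(total_cores: int, utilization_pct: float) -> float:
    """Convert a utilization percentage into core-equivalents of work."""
    return total_cores * utilization_pct / 100

# Physical core counts from the SQL Server specs in this post (2 sockets each)
servers = {
    "Stack Overflow cluster (2x E5-2697v2)": 2 * 12,
    "Everything-else cluster (2x E5-2667v3)": 2 * 8,
}
for name, cores in servers.items():
    low, high = busy_cores(cores, 5), busy_cores(cores, 10)
    print(f"{name}: 5-10% of {cores} cores = {low:.1f}-{high:.1f} cores busy")
```

The same percentage means 1.2–2.4 cores on one cluster but 0.8–1.6 on the other, which is why the hardware list matters.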

How We Do Hardware

Disclaimer: I don’t do this alone. George Beech (@GABeech) is my main partner in crime when speccing hardware here at Stack. We carefully spec out each server for its intended purpose. What we don’t do is order in bulk and assign tasks later. We’re not alone in this process though; you have to know what’s going to run on the hardware to spec it optimally. We’ll work with the developer(s) and/or other site reliability engineers to best accommodate what is intended live on the box.

We’re also looking at what’s best in the system. Each server is not an island. How it fits into the overall architecture is definitely a consideration. What services can share this platform? This data store? This log system? There is inherent value in managing fewer things, or at least fewer variations of anything.

When we spec out our hardware, we look at a myriad of requirements that help determine what to order. I’ve never really written this mental checklist down, so let’s give it a shot:

- Is this a scale up or scale out problem? (Are we buying one bigger machine or a few smaller ones?)
- How much redundancy do we need/want? (How much headroom and failover capability?)
- Storage:
  - Will this server/application touch disk? (Do we need anything besides the spinny OS drives?)
    - If so, how much? (How much bandwidth? How many small files? Does it need SSDs?)
    - If SSDs, what’s the write load? (Are we talking Intel S3500/3700s? P360x? P3700s?)
    - How much SSD capacity do we need? (And should it be a 2-tier solution with HDDs as well?)
    - Is this data totally transient? (Are SSDs without capacitors, which are far cheaper, a better fit?)
    - Will the storage needs likely expand? (Do we get a 1U/10-bay server or a 2U/26-bay server?)
    - Is this a data warehouse type scenario? (Are we looking at 3.5” drives? If so, a 12- or 16-drive 2U chassis?)
    - Is the storage trade-off for the 3.5” backplane worth the 120W TDP limit on processing?
    - Do we need to expose the disks directly? (Does the controller need to support pass-through?)
- Memory:
  - How much memory does it need? (What must we buy?)
  - How much memory could it use? (What’s reasonable to buy?)
  - Do we think it will need more memory later? (What memory channel configuration should we go with?)
  - Is this a memory-access-heavy application? (Do we want to max out the clock speed?)
  - Is it highly parallel access? (Do we want to spread the same space across more DIMMs?)
- CPU:
  - What kind of processing are we looking at? (Do we need base CPUs or power?)
  - Is it heavily parallel? (Do we want fewer, faster cores? Or, does it call for more, slower cores?)
  - In what ways? Will there be heavy L2/L3 cache contention? (Do we need a huge L3 cache for performance?)
  - Is it mostly single core performance? (Do we want maximum clock?)
  - If so, how many processes at once? (Which turbo spread do we want here?)
- Network:
  - Do we need additional 10Gb network connectivity? (Is this a “through” machine, such as a load balancer?)
  - How much balance do we need on Tx/Rx buffers? (What CPU core count balances best?)
- Redundancy:
  - Do we need servers in the DR data center as well?
    - Do we need the same number, or is less redundancy acceptable?
- Do we need a power cord? No. No we don’t.

Now, let’s see what hardware in our New York QTS data center serves the sites. Secretly, it’s really New Jersey, but let’s just keep that between us. Why do we say it’s the NY data center? Because we don’t want to rename all those NY- servers. I’ll note in the list below when and how Denver differs slightly in specs or redundancy levels.


Servers Running Stack Overflow & Stack Exchange Sites

A few global truths so I need not repeat them in each server spec below:

- OS drives are not included unless they’re special. Most servers use a pair of 250 or 500GB SATA HDDs for the OS partition, always in a RAID 1. Boot time is not a concern we have and even if it were, the vast majority of our boot time on any physical server isn’t dependent on drive speed (for example, checking 768GB of memory).

- All servers are connected by 2 or more 10Gb network links in active/active LACP.

- All servers run on 208V single phase power (via 2 PSUs feeding from 2 PDUs backed by 2 sources).

- All servers in New York have cable arms, all servers in Denver do not (local engineer’s preference).

- All servers have both an iDRAC connection (via the management network) and a KVM connection.

Network

- 2x Cisco Nexus 5596UP core switches (96 SFP+ ports each at 10 Gbps)

- 10x Cisco Nexus 2232TM Fabric Extenders (2 per rack - each has 32 BASE-T ports each at 10Gbps + 8 SFP+ 10Gbps uplinks)

- 2x Fortinet 800C Firewalls

- 2x Cisco ASR-1001 Routers

- 2x Cisco ASR-1001-x Routers

- 6x Cisco 2960S-48TS-L Management network switches (1 Per Rack - 48 1Gbps ports + 4 SFP 1Gbps)

- 1x Dell DMPU4032 KVM

- 7x Dell DAV2216 KVM Aggregators (1–2 per rack - each uplinks to the DMPU4032)

Note: Each FEX has 80 Gbps of uplink bandwidth to its core, and the cores have a 160 Gbps port channel between them. Due to being a more recent install, the hardware in our Denver data center is slightly newer. All 4 routers are ASR-1001-x models and the 2 cores are Cisco Nexus 56128P, which have 96 SFP+ 10Gbps ports and 8 QSFP+ 40Gbps ports each. This saves 10Gbps ports for future expansion since we can bond the cores with 4x 40Gbps links, instead of eating 16x 10Gbps ports as we do in New York.
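The uplink figures in the note above are simple multiplication. Here is a small Python check that treats every port as running at line rate, a worst case that rarely happens in practice.

```python
def oversubscription(down_ports: int, down_gbps: int, up_ports: int, up_gbps: int) -> float:
    """Ratio of host-facing bandwidth to uplink bandwidth."""
    return (down_ports * down_gbps) / (up_ports * up_gbps)

# Nexus 2232TM FEX: 32 x 10 Gbps BASE-T host ports, 8 x 10 Gbps SFP+ uplinks
fex_ratio = oversubscription(32, 10, 8, 10)   # 320 / 80

# Core-to-core port channel: 16 x 10 Gbps in New York vs 4 x 40 Gbps in Denver
ny_core_gbps = 16 * 10
denver_core_gbps = 4 * 40

print(f"FEX oversubscription: {fex_ratio}:1")
print(f"Core links: NY {ny_core_gbps} Gbps, Denver {denver_core_gbps} Gbps")
```

The result is a 4:1 worst-case ratio per FEX. Both sites get the same 160 Gbps between cores, but Denver gets it from 4 QSFP+ ports instead of 16 SFP+ ports.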

Here’s what the network gear looks like in New York:

…and in Denver:

Give a shout-out to Mark Henderson, one of our Site Reliability Engineers, who made a special trip to the New York DC to get me some high-res, current photos for this post.

SQL Servers (Stack Overflow Cluster)

- 2 Dell R720xd Servers, each with:

- Dual E5-2697v2 Processors (12 cores @2.7–3.5GHz each)

- 384 GB of RAM (24x 16 GB DIMMs)

- 1x Intel P3608 4 TB NVMe PCIe SSD (RAID 0, 2 controllers per card)

- 24x Intel 710 200 GB SATA SSDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

SQL Servers (Stack Exchange “…and everything else” Cluster)

- 2 Dell R730xd Servers, each with:

- Dual E5-2667v3 Processors (8 cores @3.2–3.6GHz each)

- 768 GB of RAM (24x 32 GB DIMMs)

- 3x Intel P3700 2 TB NVMe PCIe SSD (RAID 0)

- 24x 10K Spinny 1.2 TB SATA HDDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Note: Denver SQL hardware is identical in spec, but there is only 1 SQL server for each corresponding pair in New York.
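For reference, the Python below translates the raw drive counts above into usable space. It assumes standard RAID 0 and RAID 10 math and ignores filesystem overhead and decimal-vs-binary unit differences. The `raid_usable_gb` helper is hypothetical.

```python
def raid_usable_gb(drives: int, size_gb: int, level: int) -> float:
    """Usable capacity for the simple RAID levels used in this post."""
    if level == 0:
        return drives * size_gb                 # striping only, no redundancy
    if level == 1 and drives == 2:
        return size_gb                          # two-way mirror
    if level == 10 and drives >= 4 and drives % 2 == 0:
        return drives * size_gb / 2             # striped mirrors: half the raw space
    raise ValueError(f"unsupported layout: {drives} drives in RAID {level}")

arrays = {
    "R720xd SATA SSDs (24x 200 GB, RAID 10)": (24, 200, 10),
    "R730xd HDDs (24x 1.2 TB, RAID 10)": (24, 1200, 10),
    "R730xd NVMe (3x 2 TB, RAID 0)": (3, 2000, 0),
}
for name, (drives, size_gb, level) in arrays.items():
    print(f"{name}: {raid_usable_gb(drives, size_gb, level) / 1000:.1f} TB usable")
```

The RAID 0 NVMe array lines up with the 6TB of PCIe SSD space quoted for the second cluster.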

Here’s what the SQL Servers in New York looked like while getting their PCIe SSD upgrades in February:

Web Servers

- 11 Dell R630 Servers, each with:

- Dual E5-2690v3 Processors (12 cores @2.6–3.5GHz each)

- 64 GB of RAM (8x 8 GB DIMMs)

- 2x Intel 320 300GB SATA SSDs (RAID 1)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Service Servers (Workers)

- 2 Dell R630 Servers, each with:
  - Dual E5-2643 v3 Processors (6 cores @3.4–3.7GHz each)
  - 64 GB of RAM (8x 8 GB DIMMs)
- 1 Dell R620 Server, with:
  - Dual E5-2667 Processors (6 cores @2.9–3.5GHz each)
  - 32 GB of RAM (8x 4 GB DIMMs)
- 2x Intel 320 300GB SATA SSDs (RAID 1)
- Dual 10 Gbps network (Intel X540/I350 NDC)

Note: NY-SERVICE03 is still an R620, due to not being old enough for replacement at the same time. It will be upgraded later this year.

Redis Servers (Cache)

- 2 Dell R630 Servers, each with:

- Dual E5-2687W v3 Processors (10 cores @3.1–3.5GHz each)

- 256 GB of RAM (16x 16 GB DIMMs)

- 2x Intel 520 240GB SATA SSDs (RAID 1)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Elasticsearch Servers (Search)

- 3 Dell R620 Servers, each with:

- Dual E5-2680 Processors (8 cores @2.7–3.5GHz each)

- 192 GB of RAM (12x 16 GB DIMMs)

- 2x Intel S3500 800GB SATA SSDs (RAID 1)

- Dual 10 Gbps network (Intel X540/I350 NDC)

HAProxy Servers (Load Balancers)

- 2 Dell R620 Servers (CloudFlare Traffic), each with:
  - Dual E5-2637 v2 Processors (4 cores @3.5–3.8GHz each)
  - 192 GB of RAM (12x 16 GB DIMMs)
  - 6x Seagate Constellation 7200RPM 1TB SATA HDDs (RAID 10) (Logs)
  - Dual 10 Gbps network (Intel X540/I350 NDC) - Internal (DMZ) Traffic
  - Dual 10 Gbps network (Intel X540) - External Traffic
- 2 Dell R620 Servers (Direct Traffic), each with:
  - Dual E5-2650 Processors (8 cores @2.0–2.8GHz each)
  - 64 GB of RAM (4x 16 GB DIMMs)
  - 2x Seagate Constellation 7200RPM 1TB SATA HDDs (RAID 10) (Logs)
  - Dual 10 Gbps network (Intel X540/I350 NDC) - Internal (DMZ) Traffic
  - Dual 10 Gbps network (Intel X540) - External Traffic

Note: These servers were ordered at different times and as a result, differ in spec. Also, the two CloudFlare load balancers have more memory for a memcached install (which we no longer run today) for CloudFlare’s Railgun.

The service, redis, search, and load balancer boxes above are all 1U servers in a stack. Here’s what that stack looks like in New York:

Servers for Other Bits

We have other servers not directly or indirectly involved in serving site traffic. These are either only tangentially related (e.g., domain controllers which are seldom used for application pool authentication and run as VMs) or are for nonessential purposes like monitoring, log storage, backups, etc.

Since this post is meant to be an appendix for many future posts in the series, I’m including all of the interesting “background” servers as well. This also lets me share more server porn with you, and who doesn’t love that?

VM Servers (VMware, Currently)

- 2 Dell FX2s Blade Chassis, each with 2 of 4 blades populated

- 4 Dell FC630 Blade Servers (2 per chassis), each with:

- Dual E5-2698 v3 Processors (16 cores @2.3–3.6GHz each)

- 768 GB of RAM (24x 32 GB DIMMs)

- 2x 16GB SD Cards (Hypervisor - no local storage)

- Dual 4x 10 Gbps network (FX IOAs - BASET)

- 1 EqualLogic PS6210X iSCSI SAN with:

- 24x Dell 10K RPM 1.2TB SAS HDDs (RAID10)

- Dual 10Gb network (10-BASET)

- 1 EqualLogic PS6110X iSCSI SAN with:

- 24x Dell 10K RPM 900GB SAS HDDs (RAID10)

- Dual 10Gb network (SFP+)

There are a few more noteworthy servers behind the scenes that aren’t VMs. These perform background tasks, help us troubleshoot with logging, store tons of data, etc.

Machine Learning Servers (Providence)

These servers are idle about 99% of the time, but do heavy lifting for a nightly processing job: refreshing Providence. They also serve as an inside-the-datacenter place to test new algorithms on large datasets.

- 2 Dell R620 Servers, each with:

- Dual E5-2697 v2 Processors (12 cores @2.7–3.5GHz each)

- 384 GB of RAM (24x 16 GB DIMMs)

- 4x Intel 530 480GB SATA SSDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Machine Learning Redis Servers (Still Providence)

This is the redis data store for Providence. The usual setup is one master, one slave, and one instance used for testing the latest version of our ML algorithms. While not used to serve the Q&A sites, this data is used when serving job matches on Careers as well as the sidebar job listings.

- 3 Dell R720xd Servers, each with:

- Dual E5-2650 v2 Processors (8 cores @2.6–3.4GHz each)

- 384 GB of RAM (24x 16 GB DIMMs)

- 4x Samsung 840 Pro 480 GB SATA SSDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Logstash Servers (For ya know…logs)

Our Logstash cluster (using Elasticsearch for storage) stores logs from, well, everything. We plan to replicate HTTP logs in here but are hitting performance issues. However, we do aggregate all network device logs, syslogs, and Windows and Linux system logs here so we can get a network overview or search for issues very quickly. This is also used as a data source in Bosun to get additional information when alerts fire. The total cluster’s raw storage is 6x12x4 = 288 TB.

- 6 Dell R720xd Servers, each with:

- Dual E5-2660 v2 Processors (10 cores @2.2–3.0GHz each)

- 192 GB of RAM (12x 16 GB DIMMs)

- 12x 7200 RPM Spinny 4 TB SATA HDDs (RAID 0 x3 - 4 drives per)

- Dual 10 Gbps network (Intel X540/I350 NDC)

HTTP Logging SQL Server

This is where we log every single HTTP hit to our load balancers (sent from HAProxy via syslog) to a SQL database. We only record a few top level bits like URL, Query, UserAgent, timings for SQL, Redis, etc. in here – so it all goes into a Clustered Columnstore Index per day. We use this for troubleshooting user issues, detecting botnets, etc.

- 1 Dell R730xd Server with:

- Dual E5-2660 v3 Processors (10 cores @2.6–3.3GHz each)

- 256 GB of RAM (16x 16 GB DIMMs)

- 2x Intel P3600 2 TB NVMe PCIe SSDs (RAID 0)

- 16x Seagate ST6000NM0024 7200RPM Spinny 6 TB SATA HDDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Development SQL Server

We like for dev to simulate production as much as possible, so SQL matches as well…or at least it used to. We’ve upgraded production processors since this purchase. We’ll be refreshing this box with a 2U solution at the same time as we upgrade the Stack Overflow cluster later this year.

- 1 Dell R620 Server with:

- Dual E5-2620 Processors (6 cores @2.0–2.5GHz each)

- 384 GB of RAM (24x 16 GB DIMMs)

- 8x Intel S3700 800 GB SATA SSDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

That’s it for the hardware actually serving the sites or that’s generally interesting. We, of course, have other servers for the background tasks such as logging, monitoring, backups, etc. If you’re especially curious about specs of any other systems, just ask in comments and I’m happy to detail them out. Here’s what the full setup looks like in New York as of a few weeks ago:

What’s next? The way this series works is I blog in order of what the community wants to know about most. Going by the Trello board, it looks like Deployment is the next most interesting topic. So next time expect to learn how code goes from a developer’s machine to production and everything involved along the way. I’ll cover database migrations, rolling builds, CI infrastructure, how our dev environment is set up, and share stats on all things deployment.

4. Stack Overflow: How We Do Deployment - 2016 Edition

This is #3 in a very long series of posts on Stack Overflow’s architecture. Previous post (#2): Stack Overflow: The Hardware - 2016 Edition

We’ve talked about Stack Overflow’s architecture and the hardware behind it. The next most requested topic was Deployment. How do we get code a developer (or some random stranger) writes into production? Let’s break it down. Keep in mind that we’re talking about deploying Stack Overflow for the example, but most of our projects follow an almost identical pattern to deploy a website or a service.

I’m going ahead and inserting a set of section links here because this post got a bit long with all of the bits that need an explanation:

- Source & Context

- The Human Steps

- Branches

- Git On-Premises

- The Build System

- What’s In The Build?

- Tiers

- Database Migrations

- Localization/Translations (Moonspeak)

- Building Without Breaking

- Extra resources because I love you all

Source

This is our starting point for this article. We have the Stack Overflow repository on a developer’s machine. For the sake of discussing the process, let’s say they added a column to a database table and the corresponding property to the C# object — that way we can dig into how database migrations work along the way.

A Little Context

We deploy roughly 25 times per day to development (our CI build) just for Stack Overflow Q&A. Other projects also push many times. We deploy to production about 5-10 times on a typical day. A deploy from first push to full deploy is under 9 minutes (2:15 for dev, 2:40 for meta, and 3:20 for all sites). We have roughly 15 people pushing to the repository used in this post. The repo contains the code for these applications: Stack Overflow (every single Q&A site), stackexchange.com (root domain only), Stack Snippets (for Stack Overflow JavaScript snippets), Stack Auth (for OAuth), sstatic.net (cookieless CDN domain), Stack Exchange API v2, Stack Exchange Mobile (iOS and Android API), Stack Server (Tag Engine and Elasticsearch indexing Windows service), and Socket Server (our WebSocket Windows service).

The Human Steps

When we’re coding, if a database migration is involved then we have some extra steps. First, we check the chatroom (and confirm in the local repo) which SQL migration number is available next (we’ll get to how this works). Each project with a database has its own migration folder and numbering. For this deploy, we’re talking about the Q&A migrations folder, which applies to all Q&A databases. Here’s what chat and the local repo look like before we get started:

And here’s the local %Repo%\StackOverflow.Migrations\ folder:

You can see both in chat and locally that 726 was the last migration number taken. So we’ll issue a “taking 727 - Putting JSON in SQL to see who it offends” message in chat. This will claim the next migration so that we don’t collide with someone else also doing a migration. We just type a chat message, a bot pins it. Fun fact: it also pins when I say “taking web 2 offline”, but we think it’s funny and refuse to fix it. Here’s our little Pinbot trolling:
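Claiming a number is a human process (chat plus a pinning bot), but the "what's next locally" half of the check is easy to sketch. This is a minimal sketch, not our tooling: it assumes migration files follow the "<number> - <description>.sql" naming shown above, and next_migration_number is a hypothetical helper name.

```python
import re
from pathlib import Path

# Hypothetical pattern based on the example file name above: "<number> - <description>.sql"
MIGRATION_FILE = re.compile(r"^(\d+) - .+\.sql$", re.IGNORECASE)


def next_migration_number(folder: Path) -> int:
    """Return one past the highest migration number in folder, or 1 if there are none."""
    taken = []
    for path in folder.iterdir():
        match = MIGRATION_FILE.match(path.name)
        if path.is_file() and match:
            taken.append(int(match.group(1)))
    return max(taken, default=0) + 1
```

It only sees your own checkout, so someone else's unpushed 727 is invisible to it; that gap is exactly what the chat claim covers.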

Now let’s add some code — we’ll keep it simple here:

A \StackOverflow\Models\User.cs diff:

+ public string PreferencesJson { get; set; }

And our new \StackOverflow.Migrations\727 - Putting JSON in SQL to see who it offends.sql:

If dbo.fnColumnExists('Users', 'PreferencesJson') = 0
Begin
    Alter Table Users Add PreferencesJson nvarchar(max);
End

We’ve tested the migration works by running it against our local Q&A database of choice in SSMS and that the code on top of it works. Before deploying though, we need to make sure it runs as a migration. For example, sometimes you may forget to put a GO separating something that must be the first or only operation in a batch such as creating a view. So, we test it in the runner. To do this, we run the migrate.local.bat you see in the screenshot above. The contents are simple:

..\Build\Migrator-Fast --tier=local ^
  --sites="Data Source=.;Initial Catalog=Sites.Database;Integrated Security=True" %*
PAUSE

Note: the migrator is a project, but we simply drop the .exe in the solutions using it, since that’s the simplest and most portable thing that works.
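The GO pitfall mentioned above exists because GO is not a T-SQL statement: it's a batch separator that client tools such as SSMS and sqlcmd recognize and split on before sending anything to the server. Any runner that executes migration scripts has to do the same splitting itself. Here's a minimal sketch of that split; it is an illustration, not how our migrator is written, and it deliberately ignores GO's optional repeat count and any GO inside comments or string literals.

```python
import re

# A batch separator is a line containing only "GO" (any case, optional surrounding whitespace).
# Simplification: ignores GO's optional count and GO appearing inside comments or strings.
GO_LINE = re.compile(r"^\s*GO\s*$", re.IGNORECASE | re.MULTILINE)


def split_batches(script: str) -> list:
    """Split a T-SQL script into the batches a client tool would send one at a time."""
    batches = (part.strip() for part in GO_LINE.split(script))
    return [batch for batch in batches if batch]
```

Without the GO, the example below would reach the server as one batch and fail, because Create View must be the first statement in its batch.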

What does this migrator do? It hits our local copy of the Sites database. That database contains a list of all the Q&A sites that developer runs locally, and the migrator uses that list to connect and run all migrations against all databases, in parallel. Here’s what a run looks like on a simple install with a single Q&A database:
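The fan-out described here (one list of databases, every pending migration applied to each of them, databases handled in parallel) can be sketched roughly as follows. Everything in it is hypothetical: already_applied and run_migration stand in for whatever reads a database's migration history and executes a script, and the worker count is an arbitrary default.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, List, Set, Tuple


def migrate_all(
    databases: Iterable[str],
    migrations: List[Tuple[int, str]],               # (number, script) pairs
    already_applied: Callable[[str], Set[int]],      # hypothetical: read a database's migration history
    run_migration: Callable[[str, int, str], None],  # hypothetical: execute one script and record it
    max_workers: int = 8,
) -> Dict[str, List[int]]:
    """Apply every pending migration to every database; databases run in parallel."""
    ordered = sorted(migrations)  # within one database, always apply in number order

    def migrate_one(db: str) -> List[int]:
        done = already_applied(db)
        ran = []
        for number, script in ordered:
            if number not in done:
                run_migration(db, number, script)
                ran.append(number)
        return ran

    dbs = list(databases)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(zip(dbs, pool.map(migrate_one, dbs)))
```

Within a single database the migrations still run strictly in number order; only separate databases proceed concurrently, which is what keeps the parallelism safe.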

So far, so good. We have code and a migration that works and code that does…some stuff (which isn’t relevant to this process). Now it’s time to take our little code baby and send it out into the world. It’s time to fly little code, be freeeeee! Okay now that we’re excited, the typical process is:

git add <files>    # usually --all for small commits
git commit -m "Migration 727: Putting JSON in SQL to see who it offends"
git pull --rebase
git push

Note: we first check our team chatroom to see if anyone is in the middle of a deploy. Since our deployments are pretty quick, the chances of this aren’t that big. But, given how often we deploy, collisions can and do happen. Then we yell at the designer responsible.

With respect to the Git commands above: if a command line works for you, use it. If a GUI works for you, use it. Use the best tooling for you and don’t give a damn what anyone else thinks. The entire point of tooling, from an ancient hammer to a modern Git install, is to save the user time and effort. Use whatever saves you the most time and effort. Unless it’s Emacs, then consult a doctor immediately.

Branches

I didn’t cover branches above because compared to many teams, we very rarely use them. Most commits are on master. Generally, we branch for only one of a few reasons:

- A developer is new, and early on we want code reviews

- A developer is working on a big (or risky) feature and wants a one-off code review

- Several developers are working on a big feature

Other than the (generally rare) cases above, almost all commits are directly to master and deployed soon after. We don’t like a big build queue. This encourages us to make small to medium size commits often and deploy often. It’s just how we choose to operate. I’m not recommending it for most teams or any teams for that matter. Do what works for you. This is simply what works for us.

When we do branch, merging back in is always a topic people are interested in. In the vast majority of cases, we’ll squash when merging into master so that rolling back the changes is straightforward. We also keep the original branch around a few days (for anything major) in case we need to reference what that specific change was about. That being said, we’re practical. If a squash would cost a ton of developer time, then we just eat the merge history and go on with our lives.

Git On-Premises

Alright, so our code is sent to the server-side repo. Which repo? We’re currently using Gitlab for repositories. It’s pretty much GitHub, hosted on-prem. If Gitlab pricing keeps getting crazier (note: I said “crazier”, not “more expensive”), we’ll certainly re-evaluate GitHub Enterprise again.

Why on-prem for Git hosting? For the sake of argument, let’s say we used GitHub instead (we did evaluate this option). What’s the difference? First, builds are slower. While GitHub’s protocol implementation of Git is much faster, latency and bandwidth make the builds slower than pulling over 2x10Gb locally. But to be fair, GitHub is far faster than Gitlab at most operations (especially search and viewing large diffs).

However, depending on GitHub (or any offsite third party) has a few critical downsides for us. The main downside is the dependency chain. We aren’t just relying on GitHub servers to be online (their uptime is pretty good). We’re relying on them being online and on our ability to reach them. For that matter, we’re also relying on all of our remote developers to be able to push code in the first place. That’s a lot of switching, routing, fiber, and DDoS surface area in between us and the bare essentials needed to build: code. We can drastically shorten that dependency chain by being on a local server. It also alleviates most security concerns we have with any sensitive code being on a third-party server. We have no inside knowledge of any GitHub security issues or anything like that; we’re just extra careful with such things. Quite simply: if something doesn’t need to leave your network, the best security involves it not leaving your network.

All of that being said, our open source projects are hosted on GitHub and it works great. The critical ones are also mirrored internally on Gitlab for the same reasons as above. We have no issues with GitHub (they’re awesome), only the dependency chain. For those unaware, even this website is running on GitHub pages…so if you see a typo in this post, submit a PR.

The Build System

Once the code is in the repo, the continuous integration build takes over. This is just a fancy term for a build kicked off by a commit. For builds, we use TeamCity. The TeamCity server is actually on the same VM as Gitlab since neither is useful without the other and it makes TeamCity’s polling for changes a fast and cheap operation. Fun fact: since Linux has no built-in DNS caching, most of the DNS queries are looking for…itself. Oh wait, that’s not a fun fact — it’s actually a pain in the ass.

As you may have heard, we like to keep things really simple. We have extra compute capacity on our web tier, so…we use it. Builds for all of the websites run on agents right on the web tier itself, which means we have 11 build agents local to each data center. There are a few additional Windows and Linux build agents (for puppet, rpms, and internal applications) on other VMs, but they’re not relevant to this deploy process.

Like most CI builds, we simply poll the Git repo on an interval to see if there are changes. This repo is heavily hit, so we poll for changes every 15 seconds. We don’t like waiting. Waiting sucks. Once a change is detected, the build server instructs an agent to run a build.
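Polling on an interval boils down to a small loop: remember the last commit seen, ask for the branch head every 15 seconds, and queue a build when it moves. The sketch below is hypothetical (get_head and start_build are placeholders; something like git ls-remote could supply the head) and is not how TeamCity implements its VCS polling.

```python
import time
from typing import Callable, Optional


def poll_for_changes(
    get_head: Callable[[], str],         # hypothetical: returns the current commit of the watched branch
    start_build: Callable[[str], None],  # hypothetical: asks the build server to queue a build
    interval: float = 15.0,
    max_polls: Optional[int] = None,     # None means poll forever
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Check the branch head every interval seconds and queue a build when it changes."""
    last_seen = get_head()
    polls = 0
    while max_polls is None or polls < max_polls:
        sleep(interval)
        polls += 1
        head = get_head()
        if head != last_seen:
            start_build(head)
            last_seen = head
```

Several pushes landing inside one interval collapse into a single build of the newest commit, which is usually what you want from CI.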

Since our repos are large (we include dependencies like NuGet packages, though this is changing), we use what TeamCity calls agent-side checkout. This means the agent does the actual fetching of content directly from the repository, rather than the default of the TeamCity server doing the checkout and sending all of the source to the agent. On top of this, we’re using Git mirrors. Mirrors maintain a full repository (one per repo) on the agent. This means the very first time the agent builds a given repository, it’s a full git clone. However, every time after that it’s just a git pull. Without this optimization, we’re talking about a git clone --depth 1, which grabs the current file state and no history: just what we need for a build. With the very small delta we’ve pushed above (like most commits), a git pull of only that delta will always beat the pants off grabbing all of the files across the network. That first-build cost is a no-brainer tradeoff.

As I said earlier, there are many projects in this repo (all connected), so we’re really talking about several builds running each commit (5 total):

What’s In The Build?

Okay…what’s that build actually doing? Let’s take a top level look and break it down. Here are the 9 build steps in our development/CI build:

And here’s what the log of the build we triggered above looks like (you can see the full version in a gist here):

Steps 1 & 2: Migrations

The first 2 steps are migrations. In development, we automatically migrate the “Sites” database. This database is our central store that contains the master list of sites and other network-level items like the inbox. This same migration isn’t automatic in production since “should this run be before or after code is deployed?” is a 50/50 question. The second step is what we ran locally, just against dev. In dev, it’s acceptable to be down for a second, but that still shouldn’t happen. In the Meta build, we migrate all production databases. This means Stack Overflow’s database gets new SQL bits minutes before code. We order deploys appropriately.

The important part here is databases are always migrated before code is deployed. Database migrations are a topic all in themselves and something people have expressed interest in, so I detail them a bit more a little later in this post.

Step 3: Finding Moonspeak (Translation)

Due to the structure and limitations of the build process, we have to locate our Moonspeak tooling since we don’t know the location for sure (it changes with each version due to the version being in the path). Okay, what’s Moonspeak? Moonspeak is the codename for our localization tooling. Don’t worry, we’ll cover it in-depth later. The step itself is simple:

echo "##teamcity[setParameter name='system.moonspeaktools' value='$((get-childitem -directory packages/StackExchange.MoonSpeak.2*).FullName)\tools']"

It’s just grabbing a directory path and setting the system.moonspeaktools TeamCity variable to the result. If you’re curious about all of the various ways to interact with TeamCity’s build, there’s an article here.
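The same step could be written in anything that prints to stdout, since TeamCity picks service messages up from build output. Here's a sketch in Python that finds the single versioned package folder and prints the setParameter message. It also escapes values using TeamCity's service-message rules (| becomes ||, ' becomes |', [ and ] become |[ and |], newlines become |n and |r), which the one-liner above skips. The function names and the "exactly one match" rule are assumptions for illustration.

```python
from pathlib import Path

# TeamCity service-message escapes; "|" must be handled first so later escapes aren't doubled.
_ESCAPES = (("|", "||"), ("'", "|'"), ("\n", "|n"), ("\r", "|r"), ("[", "|["), ("]", "|]"))


def escape_tc(value: str) -> str:
    """Escape a value for use inside a TeamCity service message."""
    for raw, escaped in _ESCAPES:
        value = value.replace(raw, escaped)
    return value


def set_parameter_message(name: str, value: str) -> str:
    return f"##teamcity[setParameter name='{escape_tc(name)}' value='{escape_tc(value)}']"


def find_tools_dir(packages: Path, pattern: str = "StackExchange.MoonSpeak.2*") -> Path:
    """Locate the single versioned package folder and return its tools subfolder."""
    matches = [p for p in packages.glob(pattern) if p.is_dir()]
    if len(matches) != 1:
        raise RuntimeError(f"expected exactly one {pattern} folder, found {len(matches)}")
    return matches[0] / "tools"
```

In a build step this would be called as print(set_parameter_message("system.moonspeaktools", str(find_tools_dir(Path("packages"))))). Failing on zero or multiple matches is a design choice: a stale second package folder would otherwise silently produce a bogus path.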

Step 4: Translation Dump (JavaScript Edition)

In dev specifically, we run the dump of all of our need-to-be-translated strings in JavaScript for localization. Again the command is pretty simple:

%system.moonspeaktools%\Jerome.exe extract ^
  %system.translationsDumpPath%\artifact-%build.number%-js.{0}.txt en;pt-br;mn-mn;ja;es;ru ^
  ".\StackOverflow\Content\Js\*.js;.\StackOverflow\Content\Js\PartialJS\**\*.js"

Phew, that was easy. I don’t know why everyone hates localization. Just kidding, localization sucks here too. Now I don’t want to dive too far into localization because that’s a whole (very long) post on its own, but here are the translation basics:

Strings are surrounded by _s() (regular string) or _m() (markdown) in code. We love _s() and _m(). It’s almost identical for both JavaScript and C#. During the build, we extract these strings by analyzing the JavaScript (with AjaxMin) and C#/Razor (with a custom Roslyn-based build). We take these strings and stick them in files to use for the translators, our community team, and ultimately back into the build later. There’s obviously way more going on - but those are the relevant bits. It’s worth noting here that we’re excited about the proposed Source Generators feature specced for a future Roslyn release. We hope in its final form we’ll be able to re-write this portion of Moonspeak as a much simpler generator while still avoiding as many runtime allocations as possible.
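The build's extraction parses the JavaScript with AjaxMin, which is what makes it robust. For a feel of what "extract the strings" means, here's a much cruder regex-based sketch that pulls the literal first argument out of _s() and _m() calls. It is a swapped-in simplification: it misses anything that isn't a plain string literal and keeps escape sequences as written.

```python
import re

# _s("...") or _m('...') whose first argument is a plain string literal.
# Regex stand-in for a real parser: computed arguments are silently skipped.
CALL = re.compile(r"""\b_(s|m)\(\s*(?P<q>["'])(?P<text>(?:\\.|(?!(?P=q)).)*)(?P=q)""")


def extract_strings(source: str) -> list:
    """Return (kind, text) pairs in source order; kind is 's' (plain) or 'm' (markdown)."""
    return [(m.group(1), m.group("text")) for m in CALL.finditer(source)]
```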

Step 5: MSBuild

This is where most of the magic happens. It’s a single step, but behind the scenes, we’re doing unspeakable things to MSBuild that I’m going to…speak about, I guess. The full .msbuild file is in the earlier Gist. The most relevant section is the description of crazy:

THIS IS HOW WE ROLL:
CompileWeb - ReplaceConfigs - - - - - - BuildViews - - - - - - - - - - - - - PrepareStaticContent
                   \                                                          /|
                    '- BundleJavaScript - TranslateJsContent - CompileNode - '
NOTE:
since msbuild requires separate projects for parallel execution of targets, this build file is copied
2 times, the DefaultTargets of each copy is set to one of BuildViews, CompileNode or CompressJSContent.
thus the absence of the DependesOnTarget="ReplaceConfigs" on those _call_ targets

While we maintain 1 copy of the file in the repo, during the build it actually forks into 2 parallel MSBuild processes. We simply copy the file, change the DefaultTargets, and kick it off in parallel here.

The first process is building the ASP.NET MVC views with our custom Roslyn-based build in StackExchange.Precompilation, explained by Samo Prelog here. It’s not only building the views but also plugging in localized strings for each language via switch statements. There’s a hint at how that works a bit further down. We wrote this process for localization, but it turns out controlling the speed and batching of the view builds allows us to be much faster than aspnet_compiler used to be. Rumor is performance has gotten better there lately, though.

The second process is the .less, .css, and .js compilation and minification which involves a few components. First up are the .jsbundle files. They are simple files that look like this example:

{
  "items": [ "full-anon.jsbundle", "PartialJS\\full\\*.js", "bounty.js" ]
}

These files are true to their name: they are simply concatenated bundles of files for use further on. This allows us to maintain JavaScript divided up nicely across many files but handle it as one file for the rest of the build. The same bundler code runs as an HTTP handler locally to combine on the fly for local development. This sharing allows us to mimic production as best we can.
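Resolving a bundle is a small recursive expansion: read the items, expand globs relative to the bundle's folder, and recurse into nested .jsbundle entries. The sketch below follows the file format shown above; the ordering rule (sorted glob matches), the cycle check, and failing on a missing item are assumptions, not a description of our bundler.

```python
import json
from pathlib import Path


def resolve_bundle(bundle: Path, _stack: tuple = ()) -> list:
    """Expand a .jsbundle into an ordered list of .js files, recursing into nested bundles."""
    bundle = bundle.resolve()
    if bundle in _stack:
        raise ValueError(f"bundle cycle at {bundle.name}")
    items = json.loads(bundle.read_text(encoding="utf-8"))["items"]
    files = []
    for item in items:
        pattern = item.replace("\\", "/")  # bundles are written with Windows separators
        if any(ch in pattern for ch in "*?["):
            matches = sorted(bundle.parent.glob(pattern))  # sorted for a deterministic order
        else:
            matches = [bundle.parent / pattern]
        for path in matches:
            if not path.exists():
                raise FileNotFoundError(path)  # a missing item should fail the build
            if path.suffix == ".jsbundle":
                files.extend(resolve_bundle(path, _stack + (bundle,)))
            else:
                files.append(path.resolve())
    return files


def concatenate(bundle: Path) -> str:
    """Join the resolved files with newlines so statements from adjacent files don't run together."""
    return "\n".join(p.read_text(encoding="utf-8") for p in resolve_bundle(bundle))
```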

After bundling, we have regular old .js files with JavaScript in them. They have letters, numbers, and even some semicolons. They’re delightful. After that, they go through the translator of doom. We think. No one really knows. It’s black magic. Really what happens here isn’t relevant, but we get a full.en.js, full.ru.js, full.pt.js, etc. with the appropriate translations plugged in. It’s the same <filename>.<locale>.js pattern for every file. I’ll do a deep-dive with Samo on the localization post (go vote it up if you’re curious).
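The translator's internals are out of scope here, but the output naming is easy to illustrate. This hypothetical sketch swaps each _s("...") call whose argument is a plain double-quoted literal (no escapes) for the translated string, falling back to English, and writes one <filename>.<locale>.js per locale. Real translation also handles _m(), pluralization, and much more.

```python
import json
import re
from pathlib import Path

# Hypothetical simplification: only _s("...") with a plain literal containing no escapes.
CALL = re.compile(r'\b_s\(\s*"([^"\\]*)"\s*\)')


def translate_js(source: str, table: dict) -> str:
    """Replace each _s("English") call with the translated string literal (English if missing)."""
    def replace(match):
        english = match.group(1)
        return json.dumps(table.get(english, english), ensure_ascii=False)  # emits a valid JS literal
    return CALL.sub(replace, source)


def write_locales(js_file: Path, tables: dict) -> list:
    """Write <filename>.<locale>.js next to js_file for every locale in tables."""
    source = js_file.read_text(encoding="utf-8")
    written = []
    for locale, table in tables.items():
        out = js_file.with_name(f"{js_file.stem}.{locale}.js")
        out.write_text(translate_js(source, table), encoding="utf-8")
        written.append(out)
    return written
```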

After JavaScript translation completes (10-12 seconds), we move on to the Node.js piece of the build. Note: node is not installed on the build servers; we have everything needed inside the repo. Why do we use Node.js? Because it’s the native platform for Less.js and UglifyJS. Once upon a time we used dotLess, but we got tired of maintaining the fork and went with a node build process for faster absorption of new versions.

The node-compile.js is also in the Gist. It’s a simple forking script that sets up n node worker processes to handle the hundreds of files we have (due to having hundreds of sites) with the main thread dishing out work. Files that are identical (e.g. the beta sites) are calculated once then cached, so we don’t do the same work a hundred times. It also does things like add cache breakers on our SVG URLs based on a hash of their contents. Since we also serve the CSS with a cache breaker at the application level, we have a cache-breaker that changes from bottom to top, properly cache-breaking at the client when anything changes. The script can probably be vastly improved (and I’d welcome it); it was just the simplest thing that worked and met our requirements when it was written, and it hasn’t needed to change much since.

Note: a (totally unintentional) benefit of the cache-breaker calculation has been that we never deploy an incorrect image path in CSS. That situation blows up because we can’t find the file to calculate the hash…and the build fails.
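Here's a sketch of that cache-breaker, including the happy accident of failing the build on a bad image path: append a short content hash to each SVG url() in the compiled CSS, and raise if the referenced file doesn't exist. The regex, the ?v= query parameter name, and the truncated SHA-1 are assumptions for illustration; node-compile.js itself is in the Gist.

```python
import hashlib
import re
from pathlib import Path

# url(...) references to .svg files, optionally quoted; already-versioned URLs are left alone.
SVG_URL = re.compile(r"""url\(\s*(['"]?)([^'")?]+\.svg)\1\s*\)""")


def add_cache_breakers(css: str, web_root: Path) -> str:
    """Append ?v=<content hash> to every SVG url(); a missing file raises and fails the build."""
    def replace(match):
        quote, url = match.group(1), match.group(2)
        path = web_root / url.lstrip("/")
        if not path.is_file():
            raise FileNotFoundError(f"CSS references missing image: {url}")
        digest = hashlib.sha1(path.read_bytes()).hexdigest()[:8]  # hash choice is an assumption
        return f"url({quote}{url}?v={digest}{quote})"
    return SVG_URL.sub(replace, css)
```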

The totality of node-compile’s job is minifying the .js files (in place, not something like .min.js) and turning .less into .css. After that’s done, MSBuild has produced all the output we need to run a fancy schmancy website. Or at least something like Stack Overflow. Note that we’re slightly odd in that we share styles across many site themes, so we’re transforming hundreds of .less files at once. That’s the reason for spawning workers — the number spawned scales based on core count.
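The shape of node-compile's work distribution (dedupe identical inputs, then fan the rest out to a pool sized by core count) looks roughly like this in Python. It is an analogue, not a port: compile_one is a hypothetical stand-in for the Less or UglifyJS call, and executor_cls lets you swap the process pool for a thread pool when testing.

```python
import hashlib
import os
from concurrent.futures import Executor, ProcessPoolExecutor
from typing import Callable, Dict, Type


def _content_key(text: str) -> str:
    """Identify a source by its content, so identical files share one compile."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def compile_all(
    sources: Dict[str, str],
    compile_one: Callable[[str], str],  # hypothetical: e.g. Less -> CSS; must be picklable for processes
    workers: int = 0,                    # 0 means one worker per core
    executor_cls: Type[Executor] = ProcessPoolExecutor,
) -> Dict[str, str]:
    """Compile each distinct source once in a worker pool and map results back to every name."""
    unique: Dict[str, str] = {}
    for text in sources.values():
        unique.setdefault(_content_key(text), text)
    keys = list(unique)
    with executor_cls(max_workers=workers or os.cpu_count()) as pool:
        results = dict(zip(keys, pool.map(compile_one, [unique[k] for k in keys])))
    return {name: results[_content_key(text)] for name, text in sources.items()}
```

Keying on the input text is what lets hundreds of near-identical site stylesheets cost one compile per distinct input rather than one per site.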

Step 6: Translation Dump (C# Edition)

This step we call the transmogulator. It copies out all of the to-be-localized strings we use inside _s() and _m() in C# and Razor, so we have the total set to send to the translators. This isn’t a direct extraction; it’s a collection of some custom attributes added when we translated things during compilation in the previous step. This step is just a slightly more complicated version of what’s happening in step #4 for JavaScript. We dump the strings into raw .txt files for use later (and as a history of sorts). We also dump the overrides here, where we supply overrides directly on top of what our translators have translated. These are typically community fixes we want to upstream.

I realize a lot of that doesn’t make a ton of sense without going heavily into how the translation system works - which will be a topic for a future post. The basics are: we’re dumping all the strings from our codebase so that people can translate them. When they are translated, they’ll be available for step #5 above in the next build after.

Here’s the entire step:

%system.moonspeaktools%\Transmogulator.exe .\StackOverflow\bin en;pt-br;mn-mn;ja;es;ru ^
  "%system.translationsDumpPath%\artifact-%build.number%.{0}.txt" MoonSpeak
%system.moonspeaktools%\OverrideExporter.exe export "%system.translationConnectionString%" ^
  "%system.translationsDumpPath%"

Step 7: Importing English Strings

One of the weird things to think about in localization is the simplest way to translate is to not special case English. To that end, here we are special casing it. Dammit, we already screwed up. But, by special casing it at build time, we prevent having to special case it later. Almost every string we put in would be correct in English, only needing the translation overrides for multiples and such (e.g. “1 item” vs “2 items”), so we want to immediately import anything added to the English result set so that it’s ready for Stack Overflow as soon as it’s built the first time (e.g. no delay on the translators for deploying a new feature). Ultimately, this step takes the text files created for English in Steps 4 and 6 and turns around and inserts them (into our translations database) for the English entries.

This step also posts all new strings added to a special internal chatroom alerting our translators in all languages so that they can be translated ASAP. Though we don’t want to delay builds and deploys on new strings (they may appear in English for a build and we’re okay with that), we want to minimize it - so we have an alert pipe so to speak. Localization delays are binary: either you wait on all languages or you don’t. We choose faster deploys.
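The import-then-announce logic is simple enough to sketch. In this hypothetical version, existing stands in for the English entries already in the translations database and notify for the chat post; an English string is stored as its own translation, and only strings never seen before are announced.

```python
from typing import Callable, Dict, Iterable, List


def import_english(
    dumped: Iterable[str],                # strings from the English dump files
    existing: Dict[str, str],             # hypothetical: English entries already in the database
    notify: Callable[[List[str]], None],  # hypothetical: posts to the translators' chat room
) -> List[str]:
    """Insert English strings not seen before (English is its own translation) and announce them."""
    new = []
    for text in dumped:
        if text not in existing:
            existing[text] = text
            new.append(text)
    if new:
        notify(new)  # one message per build, and only when something is actually new
    return new
```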

Here’s the call for step 7:

%system.moonspeaktools%\MoonSpeak.Importer.exe "%system.translationConnectionString%" ^
  "%system.translationsDumpPath%\artifact-%build.number%.en.txt" 9 false ^
  "https://teamcity/viewLog.html?buildId=%teamcity.build.id%&tab=buildChangesDiv"
%system.moonspeaktools%\MoonSpeak.Importer.exe "%system.translationConnectionString%" ^
  "%system.translationsDumpPath%\artifact-%build.number%-js.en.txt" 9 false ^
  "https://teamcity/viewLog.html?buildId=%teamcity.build.id%&tab=buildChangesDiv"

Step 8: Deploy Website

Here’s where all of our hard work pays off. Well, the build server’s hard work really…but we’re taking credit. We have one goal here: take our built code and turn it into the active code on all target web servers. This is where you can get really complicated when you really just need to do something simple. What do you really need to perform to deploy updated code to a web server? Three things:

- Stop the website

- Overwrite the files

- Start the website

That’s it. That’s all the major pieces. So let’s get as close to the stupidest, simplest process as we can. Here’s the call for that step: it’s a PowerShell script we pre-deploy on all build agents (with a build) that very rarely changes. We use the same set of scripts for all IIS website deployments, even the Jekyll-based blog. Here are the arguments we pass to the WebsiteDeploy.ps1 script:

-HAProxyServers "%deploy.HAProxy.Servers%"
-HAProxyPort %deploy.HAProxy.Port%
-Servers "%deploy.ServerNames%"
-Backends "%deploy.HAProxy.Backends%"
-Site "%deploy.WebsiteName%"
-Delay %deploy.HAProxy.Delay.IIS%
-DelayBetween %deploy.HAProxy.Delay.BetweenServers%
-WorkingDir "%teamcity.build.workingDir%\%deploy.WebsiteDirectory%"
-ExcludeFolders "%deploy.RoboCopy.ExcludedFolders%"
-ExcludeFiles "%deploy.RoboCopy.ExcludedFiles%"
-ContentSource "%teamcity.build.workingDir%\%deploy.contentSource%"
-ContentSStaticFolder "%deploy.contentSStaticFolder%"

I’ve included the script in the Gist here, with all the relevant functions from the profile included for completeness. The meat of the main script is here (lines shortened for fit below, but the complete version is in the Gist):

$ServerSession = Get-ServerSession $s
if ($null -ne $ServerSession)
{
    Execute "Server: $s" {
        HAProxyPost -Server $s -Action "drain"
        # delay between taking a server out and killing the site, so current requests can finish
        Delay -Delay $Delay
        # kill website in IIS
        ToggleSite -ServerSession $ServerSession -Action "stop" -Site $Site
        # inform HAProxy this server is down, so we don't come back up immediately
        HAProxyPost -Server $s -Action "hdown"
        # robocopy!
        CopyDirectory -Server $s -Source $WorkingDir -Destination "\\$s\..."
        # restart website in IIS
        ToggleSite -ServerSession $ServerSession -Action "start" -Site $Site
        # stick the site back in HAProxy rotation
        HAProxyPost -Server $s -Action "ready"
        # session cleanup
        $ServerSession | Remove-PSSession
    }
}

The steps here are the minimal needed to gracefully update a website, informing the load balancer of what’s happening and impacting users as little as possible. Here’s what happens:

- Tell HAProxy to stop sending new traffic

- Wait a few seconds for all current requests to finish

- Tell IIS to stop the site (Stop-Website)

- Tell HAProxy that this webserver is down (rather than waiting for it to detect)

- Copy the new code (robocopy)

- Tell IIS to start the new site (Start-Website)

- Tell HAProxy this website is ready to come back up

Note that HAProxy doesn’t immediately bring the site back online. It does so only after 3 successful health checks, and this is a key difference between MAINT and DRAIN in HAProxy: MAINT -> READY assumes the server is instantly up, while DRAIN -> READY assumes it is down until the checks pass. The former has a very nasty effect on ThreadPool growth, since the server gets slammed with requests while things are still spinning up.
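To make the MAINT versus DRAIN difference concrete, here's a simplified model. It assumes a server coming back as DOWN needs rise consecutive passing checks (rise is HAProxy's server option for this; 3 matches the polls mentioned above), whereas a server assumed UP takes traffic at once. It models the behavior described here, not HAProxy's actual state machine.

```python
from typing import Iterable, Optional


def first_serving_check(check_results: Iterable[bool], rise: int = 3, starts_up: bool = False) -> Optional[int]:
    """Return the 1-based health check after which the server takes traffic.

    0 means it serves immediately (assumed UP); None means it never reached rise passes in a row.
    """
    if starts_up:
        return 0  # the MAINT -> READY case: traffic arrives before the app has warmed up
    streak = 0
    for i, ok in enumerate(check_results, start=1):
        streak = streak + 1 if ok else 0  # any failure resets the consecutive-pass count
        if streak >= rise:
            return i
    return None
```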

We repeat the above for all webservers in the build. There’s also a slight pause between each server, all of which is tunable with TeamCity settings.
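Put together, the per-server sequence plus the pause between servers is a short loop. This sketch mirrors the PowerShell above; haproxy, iis, and copy_code are hypothetical callbacks, and the 5-second defaults are made up (the real values come from TeamCity settings).

```python
import time
from typing import Callable, List


def rolling_deploy(
    servers: List[str],
    haproxy: Callable[[str, str], None],  # hypothetical: (server, action) sent to HAProxy
    iis: Callable[[str, str], None],      # hypothetical: (server, "stop" or "start") for the IIS site
    copy_code: Callable[[str], None],     # hypothetical: robocopy the build output to the server
    drain_delay: float = 5.0,
    delay_between: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Update servers one at a time so the rest of the pool keeps serving traffic."""
    for i, server in enumerate(servers):
        haproxy(server, "drain")  # stop sending new requests
        sleep(drain_delay)        # let in-flight requests finish
        iis(server, "stop")
        haproxy(server, "hdown")  # mark it down so READY waits for health checks
        copy_code(server)
        iis(server, "start")
        haproxy(server, "ready")  # rejoins rotation once health checks pass
        if i < len(servers) - 1:
            sleep(delay_between)  # tunable pause before touching the next server
```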

Now the above is what happens for a single website. In reality, this step deploys twice. The reason why is race conditions. For the best client-side performance, our static assets have headers set to cache for 7 days. We break this cache only when it changes, not on every build. After all, you only need to fetch new CSS, SVGs, or JavaScript if they actually changed. Since cdn.sstatic.net comes from our web tier underneath, here’s what could happen due to the nature of a rolling build:

You hit ny-web01 and get a brand spanking new querystring for the new version. Your browser then hits our CDN at cdn.sstatic.net, which, let’s say, hits ny-web07…which has the old content. Oh crap, now we have old content cached with the new hash for a hell of a long time. That’s no good: fixing it takes a CDN purge followed by a hard reload. We avoid that by pre-deploying the static assets to another website in IIS specifically serving the CDN. This way sstatic.net gets the content in one rolling deploy, just before the new code issuing new hashes goes out. This means that there is a slight chance that someone will get new static content with an old hash (if they hit a CDN miss for a piece of content that actually changed this build). The big difference is that this (rarely hit) problem fixes itself on a page reload, since the hash will change as soon as the new code is running a minute later. It’s a much better direction to fail in.
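The ordering argument reduces to comparing two versions: the one the page's hash was computed from and the one the static site actually serves. This small hypothetical model labels the three possible outcomes, with version numbers increasing by one per deploy.

```python
def cdn_outcome(hash_version: int, static_version: int) -> str:
    """Classify what the CDN caches when a page's asset hash meets a static server's content.

    consistent:   hash and content agree.
    self-healing: newer content under an older hash; the next deploy's hash is a new URL.
    poisoned:     older content cached under the newer hash for the full cache lifetime.
    """
    if static_version == hash_version:
        return "consistent"
    if static_version > hash_version:
        return "self-healing"
    return "poisoned"
```

Deploying static assets first guarantees the static site is never older than the code issuing hashes, so the poisoned case can't occur; the worst remaining case is self-healing.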

At the end of this step (in production), 7 of 9 web servers are typically online and serving users. The last 2 will finish their spin-up shortly after. The step takes about 2 minutes for 9 servers. But yay, our code is live! Now we’re free to deploy again for that bug we probably just sent out.

Step 9: New Strings Hook

This dev-only step isn’t particularly interesting, but it’s useful. All it does is call a webhook to report any new strings found in this build. The hook target triggers an upload to our translation service to tighten the iteration time on translations (similar to our chat mechanism above). It runs last because, strictly speaking, it’s optional and we don’t want it to interfere.
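Here’s a sketch of what such a hook step could look like. It assumes the build can produce the set of English strings from the previous build and the current one. The payload shape, `new_strings_payload`, `post_hook`, and any hook URL are all hypothetical. Only the behavior of reporting new strings, and doing nothing when there are none, comes from the description above.

```python
import json
import urllib.request
from typing import Optional, Set


def new_strings_payload(previous: Set[str], current: Set[str], build: str) -> Optional[str]:
    """Return a JSON body listing strings new in this build, or None if there are none."""
    added = sorted(current - previous)
    if not added:
        return None  # nothing new: the step can skip calling the hook entirely
    return json.dumps({"build": build, "newStrings": added})


def post_hook(url: str, body: str, timeout: float = 10.0) -> int:
    """POST the payload to a (hypothetical) hook endpoint and return the HTTP status."""
    request = urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```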

That’s it. Dev build complete. Put away the rolly chairs and swords.

Tiers

What we covered above was the entire development CI build with all the things™. All of the translation bits are development only because we just need to get the strings once. The meta and production builds are a simpler subset of the steps. Here’s a simple visualization that compares the build steps across tiers:

| Build Step | Dev | Meta | Prod |
|---|---|---|---|
| 1 - Migrate Sites DB | ✓ | ✓ | ✓ |
| 2 - Migrate Q&A DBs | ✓ | ✓ | ✓ |
| 3 - Find MoonSpeak Tools | ✓ | ✓ | ✓ |
| 4 - Translation Dump (JavaScript) | ✓ | | |
| 5 - MSBuild (Compile Compress and Minify) | ✓ | ✓ | ✓ |
| 6 - Translation Dump (C#) | ✓ | | |
| 7 - Translations Import English Strings | ✓ | | |
| 8 - Deploy Website | ✓ | ✓ | ✓ |
| 9 - New Strings Hook | ✓ | | |

What do the tiers really translate to? All of our development sites are on the WEB10 and WEB11 servers (under different application pools and websites). Meta also runs on WEB10 and WEB11; this tier is specifically meta.stackexchange.com and meta.stackoverflow.com. Production (all other Q&A sites and metas, including Stack Overflow) runs on WEB01-WEB09.

Note: we post a chat notification for each build as someone promotes it through the tiers. Here’s me (against all sane judgement) building out some changes at 5:17pm on a Friday. Don’t try this at home; I’m a professional. Sometimes. Not often.

Database Migrations

See? I promised we’d come back to these. To reiterate: if new code is needed to handle the database migrations, it must be deployed first. In practice, though, you’re likely dropping a table or adding a table/column. For the removal case, we remove it from code, deploy, then deploy again (right away or later) with the drop migration. For the addition case, we typically add it as nullable or unused in code. If it needs to be NOT NULL, a foreign key, etc., we do that in a later deploy as well.
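The two cases can be sketched as ordered deploy plans. This is an illustration under a few assumptions. It targets SQL Server, where `COL_LENGTH` returns NULL for a missing column. Table and column names are hypothetical. The "make it NOT NULL later" step assumes every existing row has a value by then.

```python
from typing import List, Tuple

# (deploy number, what happens, migration SQL or "" when the deploy is code-only)
Step = Tuple[int, str, str]


def add_column_plan(table: str, column: str, sql_type: str) -> List[Step]:
    """Addition: add the column as nullable first, tighten it in a later deploy."""
    # Names are interpolated directly: fine for developer-written identifiers only.
    add = (f"IF COL_LENGTH('{table}', '{column}') IS NULL "
           f"ALTER TABLE {table} ADD {column} {sql_type} NULL;")
    tighten = f"ALTER TABLE {table} ALTER COLUMN {column} {sql_type} NOT NULL;"
    return [
        (1, "migration adds a nullable column; code ignores or tolerates it", add),
        (2, "later deploy: make it NOT NULL (assumes every row now has a value)", tighten),
    ]


def drop_column_plan(table: str, column: str) -> List[Step]:
    """Removal: stop referencing the column in code, drop it in a later deploy."""
    drop = (f"IF COL_LENGTH('{table}', '{column}') IS NOT NULL "
            f"ALTER TABLE {table} DROP COLUMN {column};")
    return [
        (1, "deploy code that no longer references the column", ""),
        (2, "migration drops the column", drop),
    ]
```

The `IF COL_LENGTH(...)` guards also make each migration idempotent, so re-running one does no harm.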

The database migrator we use is a very simple repo we could open source, but honestly, there are dozens out there and the “same migration against n databases” case is fairly specific. The others are probably much better, and ours is tailored to only our needs. The migrator connects to the Sites database, gets the list of databases to run against, and executes all migrations against every one (running multiple databases in parallel). It does this by loading the passed-in migrations folder (once) and hashing the contents of every file. Each database has a Migrations table that keeps track of what has already been run. It looks like this (descending order):
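The load-once, hash-everything, fan-out shape can be sketched in Python. `load_migrations`, `migrate_all`, and the `run_one` callback are hypothetical. In the real tool the database list comes from the Sites database. The choice of SHA-256 here is an assumption.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, Dict, Iterable, Tuple

# file name -> (SQL text, SHA-256 of the file's bytes)
Migrations = Dict[str, Tuple[str, str]]


def load_migrations(folder: Path) -> Migrations:
    """Read every .sql file exactly once, in file-name order, and hash its contents."""
    result: Migrations = {}
    for path in sorted(folder.glob("*.sql")):
        raw = path.read_bytes()
        result[path.name] = (raw.decode("utf-8"), hashlib.sha256(raw).hexdigest())
    return result


def migrate_all(
    databases: Iterable[str],
    migrations: Migrations,
    run_one: Callable[[str, Migrations], int],
    max_workers: int = 8,
) -> Dict[str, int]:
    """Run the same migration set against every database, several at a time."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {db: pool.submit(run_one, db, migrations) for db in databases}
    # Leaving the with-block waits for all work; result() re-raises any failure.
    return {db: future.result() for db, future in futures.items()}
```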

Note that the above aren’t all in file number order. That’s because 724 and 725 were in a branch for a few days. That’s not an issue; order is not guaranteed. Each migration itself is written to be idempotent, e.g. “don’t try to add the column if it’s already there”, but the specific order isn’t usually relevant. Either they’re all per-feature, or they’re actually going in order anyway. The migrator respects the GO operator to separate batches and by default runs all migrations in a transaction. The transaction behavior can be changed with a first-line comment in the .sql file: -- no transaction --. Perhaps the most useful explanation of the migrator is the README.md I wrote for it. Here it is in the Gist.
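Batch splitting and the per-file transaction opt-out might look like the sketch below. It is simplified. It treats a line containing only `GO` (in any case) as a separator. It ignores `GO <count>` and any `GO` inside strings or block comments. `parse_migration` is a hypothetical name.

```python
import re
from typing import List, Tuple

# A separator is a line holding only GO (case-insensitive), optionally with a trailing \r.
_GO_LINE = re.compile(r"^[ \t]*GO[ \t]*\r?$", re.IGNORECASE | re.MULTILINE)


def parse_migration(sql: str) -> Tuple[bool, List[str]]:
    """Return (use_transaction, batches) for the text of one migration file."""
    lines = sql.splitlines()
    first = lines[0].strip().lower() if lines else ""
    use_transaction = first != "-- no transaction --"
    batches = [batch.strip() for batch in _GO_LINE.split(sql)]
    return use_transaction, [batch for batch in batches if batch]
```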

In memory, we compare the list of migrations that already ran to those needing to run, then execute what needs running, in file order. If the hash of a file doesn’t match the hash recorded for the migration with the same file name in the table, we abort as a safety measure. We can use --force to resolve this in the rare cases a migration should have changed (almost always due to developer error). After all migrations have run, we’re done.
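The comparison and the safety check can be sketched as a pure function over two maps of file name to content hash. One map comes from the migrations folder and the other from the database’s Migrations table. The names are hypothetical. "File order" is approximated by sorting file names, so the names need consistent numbering. The text above doesn’t say what --force does beyond skipping the abort, so this sketch only skips it.

```python
from typing import Dict, List


class MigrationHashMismatch(Exception):
    """A migration that already ran has different file contents now."""


def pending_migrations(
    files: Dict[str, str], applied: Dict[str, str], force: bool = False
) -> List[str]:
    """files/applied map file name -> content hash; return names still to run, in order."""
    mismatched = [name for name, h in files.items() if name in applied and applied[name] != h]
    if mismatched and not force:
        raise MigrationHashMismatch(", ".join(sorted(mismatched)))
    return [name for name in sorted(files) if name not in applied]
```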

Rollbacks. We rarely do them. In fact, I can’t remember ever having done one. We avoid them through our general approach: we deploy small and often. It’s often quicker to fix code and deploy than to reverse a migration, especially across hundreds of databases. We also make development mimic production as closely as possible, restoring production data periodically. If we needed to reverse something, we could just push another migration negating whatever we did that went boom. The tooling has no concept of rollback, though. Why roll back when you can roll forward?

Localization/Translations (Moonspeak)

This will get its own post, but I wanted to hint at why we do all of this work at compile time. After all, I always advocate strongly for simplicity (yep, even in this 6,000-word blog post; the irony is not lost on me). You should only do something more complicated when you need to do something more complicated. This is one of those cases, for performance. Samo does a lot of work to make our localizations have as little runtime impact as possible. We’ll gladly trade a bit of build complexity to make that happen. While there are options such as .resx files or the new localization in ASP.NET Core 1.0, most of these allocate more than necessary, especially with tokenized strings. Here’s what strings look like in our code:

And here’s what that line looks like compiled (via Reflector):

…and most importantly, the compiled implementation:

Note that we aren’t allocating the entire string together, only the pieces (with most interned). This may seem like a small thing, but at scale, allocating whole strings adds up to a huge number of allocations and a lot of time in the garbage collector. I’m sure that just raises a ton of questions about how Moonspeak works. If so, go vote it up on the Trello board. It’s a big topic in itself; I only wanted to justify the compile-time complication it adds here. To us, it’s worth it.
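Python can’t show the allocation savings, but it can show the shape of the idea. A tokenized string is parsed once (at build time), with the literal pieces kept interned. At render time, the pieces and token values are written straight to the output, with no whole format string built and rescanned. The `$token$` syntax, `compile_template`, and `render` are assumptions for illustration, not Moonspeak’s actual API.

```python
import io
import re
import sys
from typing import Dict, List, Tuple, Union

# A piece is either literal text or a 1-tuple naming a token to substitute.
Piece = Union[str, Tuple[str]]

_TOKEN = re.compile(r"\$(\w+)\$")


def compile_template(template: str) -> List[Piece]:
    """Split once (the 'build time' step) into interned literals and token slots."""
    pieces: List[Piece] = []
    pos = 0
    for match in _TOKEN.finditer(template):
        if match.start() > pos:
            pieces.append(sys.intern(template[pos:match.start()]))
        pieces.append((match.group(1),))
        pos = match.end()
    if pos < len(template):
        pieces.append(sys.intern(template[pos:]))
    return pieces


def render(pieces: List[Piece], values: Dict[str, object], out: io.StringIO) -> None:
    """Write pieces directly to the output; no template parsing at request time."""
    for piece in pieces:
        if isinstance(piece, tuple):
            out.write(str(values[piece[0]]))
        else:
            out.write(piece)
```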

Building Without Breaking

A question I’m often asked is how we prevent breaks while rolling out new code constantly. Here are some common things we run into and how we avoid them.

- Cache object changes:
  - If a cache object totally changes, that’s a new cache key, and we let the old one fall out naturally with time.
  - If a cache object only changes locally (in-memory): nothing to do. The new app domain doesn’t collide.
  - If a cache object changes in Redis, then we need to make sure the old and new protobuf signatures are compatible…or change the key.

- Tag Engine:
  - The tag engine reloads on every build (currently). This is triggered by checking every minute for a new build hash on the web tier. If one is found, the application \bin and a few configs are downloaded to the Stack Server host process and spun up as a new app domain. This sidesteps the need for a deploy to those boxes and keeps local development setup simple (we run no separate process locally).
  - This one is changing drastically soon, since reloading on every build is way more often than necessary. We’ll be moving to a more traditional deploy-it-when-it-changes model there soon. Possibly using GPUs. Stay tuned.

- Renaming SQL objects:
  - “Doctor, it hurts when I do that!”
  - “Don’t do that.”
  - We may add and migrate, but a live rename is almost certain to cause an outage of some sort. We don’t do that outside of dev.

- APIs:
  - Deploy the new endpoint before the new consumer.
  - If changing an existing endpoint, it’s usually across 3 deploys: add (endpoint), migrate (consumer), cleanup (endpoint).

- Bugs:
  - Try not to deploy bugs.
  - If you screw up, try not to do it the same way twice.
  - Accept that crap happens, live, learn, and move on.
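The cache rules above come down to two small habits, sketched here. First, put a version in the key when an object’s shape changes completely. Second, make readers tolerant of missing or extra fields when old and new code share an entry during a rolling build. The key format and `read_profile` are hypothetical. JSON stands in for protobuf, and the sketch only imitates protobuf’s compatibility rules (unknown fields ignored, missing fields defaulted).

```python
import json
from typing import Any, Dict


def cache_key(name: str, schema_version: int) -> str:
    """A completely new object shape gets a new key; old entries simply expire."""
    return f"{name}:v{schema_version}"


def read_profile(raw: bytes) -> Dict[str, Any]:
    """Tolerant reader for an entry that old and new code may both see in Redis:
    unknown fields are ignored and missing ones fall back to defaults."""
    data = json.loads(raw)
    return {
        "id": data["id"],
        "name": data.get("name", ""),
        "badges": data.get("badges", 0),  # field added by the newer code
    }
```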

That’s all of the major bits of our deployment process. But as always, ask any questions you have in comments below and you’ll get an answer.

I want to take a minute and thank the teams at Stack Overflow here. We build all of this, together. Many people help me review these blog posts before they go out to make sure everything is accurate. The posts are not short, and several people are reviewing them in off-hours because they simply saw a post in chat and wanted to help out. These same people hop into comment threads here, on Reddit, on Hacker News, and other places discussions pop up. They answer questions as they arise or relay them to someone who can answer. They do this on their own, out of a love for the community. I’m tremendously appreciative of their effort and it’s a privilege to work with some of the best programmers and sysadmins in the world. My lovely wife Elise also gives her time to help edit these before they go live. To all of you: thanks.

What’s next? The way this series works is I blog in order of what the community wants to know about most. Going by the Trello board, it looks like Monitoring is the next most interesting topic. So next time, expect to learn how we monitor all of the systems here at Stack. I’ll cover how we monitor servers and services as well as the performance of Stack Overflow 24/7 as users see it all over the world. I’ll also cover many of the monitoring tools we’re using and have built; we’ve open sourced several big ones. Thanks for reading this post, which ended up way longer than I envisioned, and see you next time.

五、Stack Overflow: How We Do Monitoring - 2018 Edition

This is #4 in a very long series of posts on Stack Overflow’s architecture. Previous post (#3): Stack Overflow: How We Do Deployment - 2016 Edition

What is monitoring? As far as I can tell, it means different things to different people. But we more or less agree on the concept. I think. Maybe. Let’s find out! When someone says monitoring, I think of:

…but evidently some people think of other things. Those people are obviously wrong, but let’s continue. When I’m not a walking zombie after reading a 10,000-word blog post some idiot wrote, I see monitoring as the process of keeping an eye on your stuff, like a security guard sitting at a desk full of cameras somewhere. Sometimes they fall asleep: that’s monitoring going down. Sometimes they’re distracted by a doughnut delivery: that’s an upgrade outage. Sometimes the camera is on a loop: I don’t know where I was going with that one, but someone’s probably robbing you. And then you have the fire alarm. You don’t need a human to trigger that. The same applies when a door gets opened: maybe that’s wired to a siren. Or maybe it’s not. Or maybe the siren broke in 1984.

I know what you’re thinking: Nick, what the hell? My point is only that monitoring any application isn’t that much different from monitoring anything else. Some things you can automate. Some things you can’t. Some things have thresholds for which alarms are valid. Sometimes you’ll get those thresholds wrong (especially on holidays). And sometimes, when setting up further automation isn’t quite worth it, you just make using human eyes easier.

What I’ll discuss here is what we do. It’s not the same for everyone. What’s important and “worth it” will be different for almost everyone. As with everything else in life, it’s full of trade-off decisions. Below are the ones we’ve made so far. They’re not perfect. They are evolving. And when new data or priorities arise, we will change earlier decisions when it warrants doing so. That’s how brains are supposed to work.

And once again this post got longer and longer as I wrote it (but a lot of pictures make up that scroll bar!). So, links for your convenience:

- Types of Data

- Logs

- Health Checks

- Metrics

- Alerting

- Bosun

- Grafana

- Client Timings

- MiniProfiler

- Opserver

- Where Do We Go Next?

- Tools Summary

Types of Data

Monitoring generally consists of a few types of data (I’m absolutely arbitrarily making some groups here):

- Logs: Rich, detailed text and data but not awesome for alerting

- Metrics: Tagged numbers for telemetry: good for alerting, but lacking in detail

- Health Checks: Is it up? Is it down? Is it sideways? Very specific, often for alerting

- Profiling: Performance data from the application to see how long things are taking